I have a project in which a PC-side program issues commands over a virtual COM port to a Teensy3 (handled by Serial on the device side). Each command triggers some I2C activity and returns a byte of data indicating error status (slave not responding, and so on). The PC side sends a command, awaits the error byte, then immediately sends the next command. There are no delays on the PC side other than, presumably, some OS scheduling for USB traffic.

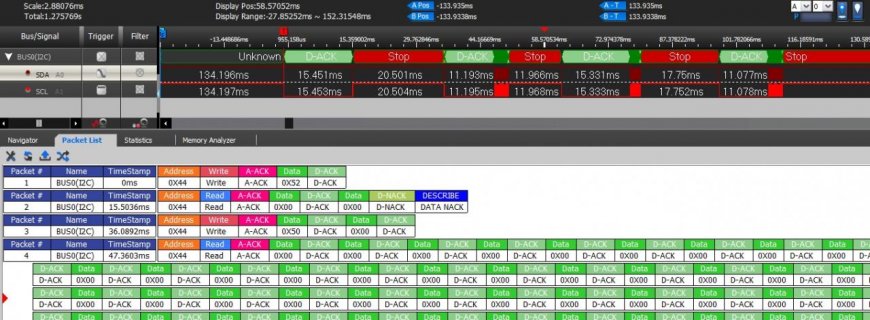

I noticed on my device that the commands are slower than expected, and each command appears to have ~100ms delay between it. I'm trying to locate the source of these 100ms delays. Here is an example picture of what I'm seeing - it is a series of commands, you can see that each burst of I2C activity is separated from the rest by anywhere from 102ms to 115ms:

My guess is that this is due to the USB traffic. I've done some low-level USB work before, and I know some devices can have descriptors set to indicate a polling rate, but I've not pulled apart the Serial code to figure out what is going on.
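One way to narrow this down might be to time each round trip on the PC side. The following is only a minimal sketch, not my actual program: `time_round_trips` is a hypothetical helper, and it assumes a port object with blocking `write()` and `read()` methods (such as the one pyserial provides) and a one-byte reply per command.

```python
import time


def time_round_trips(port, commands, reply_size=1):
    """Send each command, wait for its reply, and record the elapsed time.

    `port` is assumed to expose write(bytes) and read(n), as a pyserial
    Serial object does. Returns a list of (command, reply, elapsed_ms).
    """
    timings = []
    for cmd in commands:
        start = time.perf_counter()
        port.write(cmd)
        # Blocks until reply_size bytes arrive (or the port's timeout expires).
        reply = port.read(reply_size)
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        timings.append((cmd, reply, elapsed_ms))
    return timings
```

If each round trip measured this way takes around 100 ms, the time is being spent somewhere between the write and the reply (USB, Serial, or the device itself); if the round trips are short, the gap would more likely be on the PC side between commands.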

Is Serial the problem here? If so, is there anything I can tweak to change this behavior?
