Hi guys,

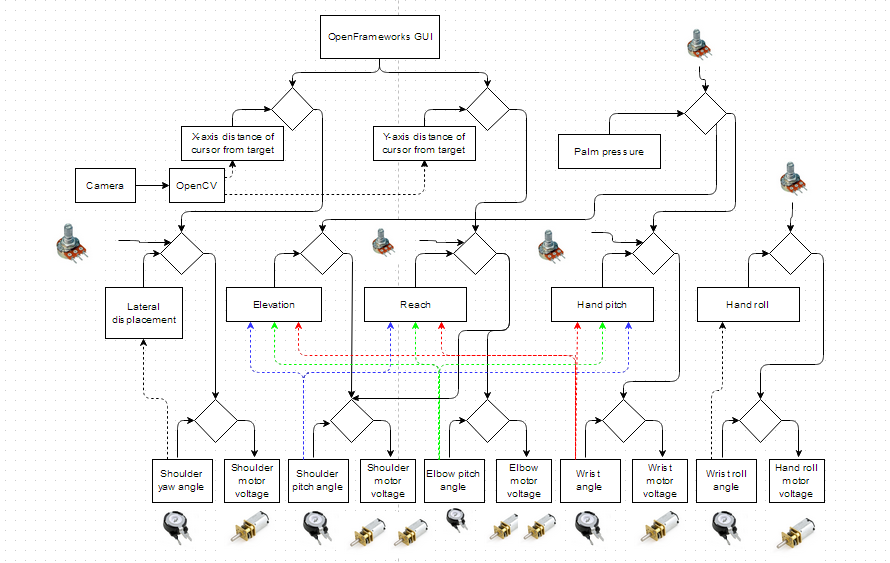

I made a robot arm based on the principles of perceptual control theory (PCT), developed by W.T. Powers. As the title says, the arm can position its endpoint in space without using inverse kinematics. Instead, it uses a cascade, or hierarchy, of feedback loops. Positioning is not terribly precise, but I think it can be improved.

The arm has 5 degrees of freedom. It is made from 5 mm foam plastic board (aka Forex board). Each joint has one or two geared micro motors and a potentiometer that measures the angular position of the joint. A Teensy 3.1 board reads the potentiometer values, does the calculations, and sends outputs to TB6612FNG motor drivers. The setup runs at 12 V.

Calculations on the Teensy involve two levels of feedback loops. The first level is simple proportional control of each joint angle. Inputs for the second level are calculated from the joint angles using basic trigonometric functions and represent reach, elevation and lateral displacement of the endpoint, as well as hand roll and hand pitch. The outputs of these second-level loops directly vary the setpoints of the first-level loops.
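To give a rough idea of how the two levels fit together, here is a minimal sketch in plain C++ that runs on a desktop. It is not the code from ArmTeensy.ino or Kinematics.ino. It assumes a simplified two-joint planar arm (shoulder and elbow only) with just two second-level perceptions, reach and elevation. The link lengths, gains, integrating second-level outputs, and the motor model (joint speed proportional to drive) are all assumptions made for the illustration, and every name in it is hypothetical.

```cpp
#include <cassert>
#include <cmath>

// All constants and names are illustrative assumptions, not values from the real arm.
constexpr double kUpperArm  = 0.20;  // upper arm length, m
constexpr double kForearm   = 0.20;  // forearm length, m
constexpr double kJointGain = 20.0;  // level 1 proportional gain, 1/s
constexpr double kReachGain = 20.0;  // level 2 integrating gain, rad/(m*s)
constexpr double kElevGain  = 2.0;   // level 2 integrating gain, 1/s

struct Joints     { double shoulder; double elbow; };   // rad, as the pots would report
struct Perception { double reach; double elevation; };  // m, rad

// Level 2 input function: forward trigonometry only, nothing is inverted.
Perception perceive(const Joints& q) {
    const double x = kUpperArm * std::cos(q.shoulder)
                   + kForearm  * std::cos(q.shoulder + q.elbow);
    const double y = kUpperArm * std::sin(q.shoulder)
                   + kForearm  * std::sin(q.shoulder + q.elbow);
    return { std::hypot(x, y), std::atan2(y, x) };
}

// Runs the two-level cascade for `steps` iterations of length `dt` seconds
// and returns the final perceived reach and elevation.
Perception simulate(Joints q, Perception target, double dt, int steps) {
    assert(dt > 0.0 && steps >= 0);
    Joints ref = q;  // level 1 setpoints start at the current pose
    for (int i = 0; i < steps; ++i) {
        const Perception p = perceive(q);

        // Level 2: each error slowly shifts one joint setpoint.
        ref.shoulder += kElevGain * (target.elevation - p.elevation) * dt;
        // Bending the elbow shortens reach, hence the minus sign.
        ref.elbow -= kReachGain * (target.reach - p.reach) * dt;
        // Keep the elbow setpoint away from the fully straight and fully folded poses.
        ref.elbow = std::fmax(0.2, std::fmin(2.8, ref.elbow));

        // Level 1: proportional control of each joint angle.
        // Assumed motor model: joint speed is proportional to the drive signal.
        q.shoulder += kJointGain * (ref.shoulder - q.shoulder) * dt;
        q.elbow    += kJointGain * (ref.elbow    - q.elbow)    * dt;
    }
    return perceive(q);
}
```

Calling `simulate({0.3, 1.2}, {0.30, 0.5}, 0.01, 2000)` starts the arm at a shoulder angle of 0.3 rad and an elbow angle of 1.2 rad and asks for a reach of 0.30 m at an elevation of 0.5 rad. The loops never solve for joint angles. The elbow change also disturbs elevation, and the elevation loop simply corrects for that as another error.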

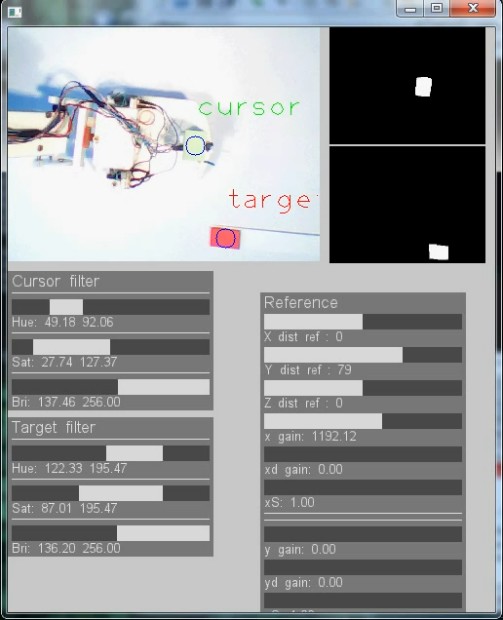

Here is the complete source code. It is still in development, since I'm trying to add 'touch' perception to the hand, and there will be a simple visual system using OpenCV. I'll post that when it's done.

View attachment ArmTeensy.ino

View attachment Kinematics.ino

The structure of the feedback loops is explained in this paper:

http://www.livingcontrolsystems.com/demos/arm_one/arm_one_win_calc.pdf

There is a full simulation with source code in Delphi here: http://www.billpct.org/

I love the Teensy 3.1 board. I'm a newb in electronics and I've fried two Maple boards on this project, and the Arduino is a bit too slow. The 5 V tolerant inputs are really helpful.
