PaulStoffregen


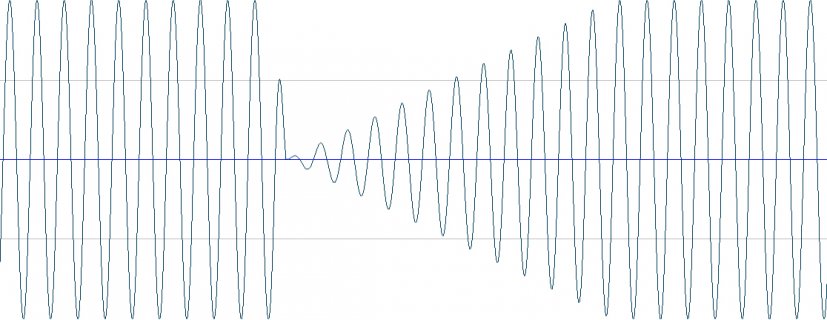

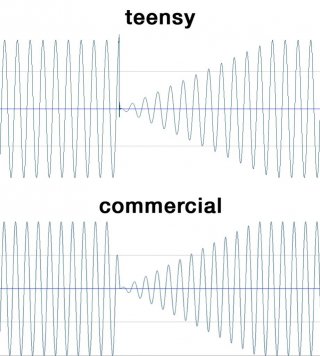

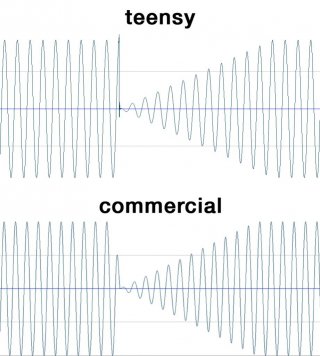

But the question remains, what *should* the envelope object actually do if you end the note and then immediately begin the next? Do you understand this question?

The envelope object is already passing the sine wave with the gain for sustain (which you set to 1.0). So if you end the note and begin another, what waveform do you expect to appear at the output?
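To make the question concrete, here is a toy per-sample gain model. It is only a sketch under stated assumptions: `ToyEnvelope`, `restartFromZero` and `endThenBegin` are hypothetical names, the ramps are linear, and this is not the Teensy Audio Library's `AudioEffectEnvelope` code. It holds a note at sustain (gain 1.0), ends it, and begins the next note with no samples processed in between, under two possible policies.

```cpp
#include <cassert>
#include <vector>

// Hypothetical toy envelope, NOT the Teensy AudioEffectEnvelope implementation.
// Gain ramps linearly during attack and release; the sustain level is 1.0.
struct ToyEnvelope {
  enum State { Idle, Attack, Sustain, Release } state = Idle;
  float gain = 0.0f;
  int attackSamples;
  int releaseSamples;
  bool restartFromZero;  // hypothetical policy: what noteOn does while still active

  ToyEnvelope(int a, int r, bool restart)
      : attackSamples(a), releaseSamples(r), restartFromZero(restart) {
    assert(a > 0 && r > 0);  // ramps need at least one sample
  }

  void noteOn() {
    if (restartFromZero) gain = 0.0f;  // abrupt drop if the note is still sounding
    state = Attack;
  }

  void noteOff() {
    if (state != Idle) state = Release;
  }

  // Advance one sample and return the gain applied to the input (e.g. a sine wave).
  float next() {
    switch (state) {
      case Attack:
        gain += 1.0f / attackSamples;
        if (gain >= 1.0f) { gain = 1.0f; state = Sustain; }
        break;
      case Release:
        gain -= 1.0f / releaseSamples;
        if (gain <= 0.0f) { gain = 0.0f; state = Idle; }
        break;
      default:  // Idle and Sustain hold the current gain
        break;
    }
    return gain;
  }
};

// Hold a note until it reaches sustain, then end it and immediately begin the next.
std::vector<float> endThenBegin(bool restartFromZero) {
  ToyEnvelope env(4, 4, restartFromZero);
  std::vector<float> g;
  env.noteOn();
  for (int i = 0; i < 6; ++i) g.push_back(env.next());  // attack, then sustain at 1.0
  env.noteOff();
  env.noteOn();  // no samples processed between ending and beginning
  for (int i = 0; i < 6; ++i) g.push_back(env.next());
  return g;
}
```

In this model, with `restartFromZero` true the gain falls from 1.0 to 0.25 in a single sample, which on a sine wave at full sustain is an abrupt discontinuity (a click). With it false, the gain never leaves 1.0, so the output is simply the same sine wave continuing, and no new note is audible at all. Neither is obviously "right", which is why the question matters.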

The envelope object is already passing the sine wave with the gain for sustain (which you set to 1.0). So if you end the note and begin another, what waveform do you expect to appear at the output?