Say I have the following:

Code:

uint32_t X = Z/Y;

uint32_t F = Z-(Y*X);

Can these two be optimised.

A C89/C90/C99/C11 compiler will calculate

X using integer division, and thus

F = Z % Y (where

% is the integer modulus operator in C).

Some architectures (i386 and AMD64, i.e. 32-bit and 64-bit Intel/AMD) have an integer divide instruction that yields the remainder as well, and many compilers have optimizations to combine a division and remainder into one. The C89/C99

div() function is intended as an optimization for this particular case. It returns a

div_t structure, which contains both the quotient (result) (in

quot member) and the remainder (in

rem member of the

div_t structure).

Unfortunately, while the Cortex-M4 (Teensy 3.x) does have an integer division operation as part of the Thumb instruction set, it only computes the quotient, rounding towards zero.

Comparing hand-written and C versions of

udiv() and

sdiv():

Code:

typedef struct {

unsigned int quot;

unsigned int rem;

} udiv_t;

typedef struct {

int quot;

int rem;

} sdiv_t;

static inline __attribute__((always_inline))

udiv_t udiv_c(const unsigned int num, const unsigned int denom)

{

register udiv_t result;

result.quot = num / denom;

result.rem = num - result.quot * denom;

return result;

}

static inline __attribute__((always_inline))

sdiv_t sdiv_c(const int num, const int denom)

{

register sdiv_t result;

result.quot = num / denom;

result.rem = num - result.quot * denom;

return result;

}

static inline __attribute__((always_inline))

udiv_t udiv_asm(const unsigned int num, const unsigned int denom)

{

register udiv_t result;

__asm__ __volatile__ ( "udiv %0, %2, %3\n\t"

"mul %1, %0, %3\n\t"

"sub %1, %2, %1\n\t"

: "+r" (result.quot) /* %0 */

,"+r" (result.rem) /* %1 */

: "r" (num) /* %2 */

,"r" (denom) /* %3 */

);

return result;

}

static inline __attribute__((always_inline))

sdiv_t sdiv_asm(const int num, const int denom)

{

register sdiv_t result;

__asm__ __volatile__ ( "sdiv %0, %2, %3\n\t"

"mul %1, %0, %3\n\t"

"sub %1, %2, %1\n\t"

: "+r" (result.quot) /* %0 */

,"+r" (result.rem) /* %1 */

: "r" (num) /* %2 */

,"r" (denom) /* %3 */

);

return result;

}

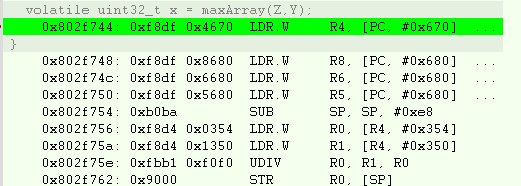

using

arm-none-eabi-gcc-4.9.3 -mthumb -mcpu=cortex-m4 -Os and

arm-none-eabi-gcc-4.9.3 -mthumb -mcpu=cortex-m4 -O2, we find that the code generated from the C version is actually superior (due to smaller function pre/postamble).

Further experimentation shows that as long as you have the two operations nearby -- first computing the quotient, followed soon enough by computing the remainder, either via the

% modulus operator, or by substracting the product of the quotient and denominator from the numerator (both compiling to the exact same machine code) --, GCC-4.9.3 compiles very good code using

-mthumb -mcpu=cortex-m4 -Os and

-mthumb -mcpu=cortex-m4 -O2; certainly good enough for me to

not write inline assembly variants!

The ARM (non-Thumb) instruction set part of cortex-M4 does not have any division operator, so if you do not allow for Thumb instructions, GCC must use a helper function (

__aeabi_uidiv() for

unsigned int arguments) instead. Teensy LC uses Cortex-M0+ (armv6-m architecture for GCC), and it does not have a hardware divide instruction; division by a variable is computed using such a library helper function instead.

In general, the code quoted from Xenoamor's post will compile to good code for Teensy LC, too, although due to the lack of division instruction in the machine code, it'll have to call to the library division function.

TL;DR:

GCC will generate pretty optimized code for those expressions, but you must tell it the exact features Teensy supports via compiler options.

If you are using fixed-point arithmetic on Cortex-M4 (Teensy 3.x), you should note that it does have a machine instruction for multiplying two 32-bit values yielding a 64-bit result. For example,

Code:

unsigned int umulhi(const unsigned int a, const unsigned int b)

{

return ((unsigned long long)a * (unsigned long long)b) >> 32;

}

unsigned int umulhiadd(const unsigned int a, const unsigned int b, const unsigned long long c)

{

return ((unsigned long long)a * (unsigned long long)b + c) >> 32;

}

int smulhi(const int a, const int b)

{

return ((long long)a * (long long)b) >> 32;

}

int smulhiadd(const int a, const int b, const long long c)

{

return ((long long)a * (long long)b + c) >> 32;

}

compiles to (using

-mthumb -mcpu=cortex-m4 -O2 or

-Os):

Code:

umulhi:

umull r0, r1, r0, r1

mov r0, r1

bx lr

umulhiadd:

umlal r2, r3, r1, r0

mov r0, r3

bx lr

smulhi:

smull r0, r1, r0, r1

mov r0, r1

bx lr

smulhiadd:

smlal r2, r3, r1, r0

mov r0, r3

bx lr

which is optimal, as far as I can tell.

This means that instead of division, you should check if you can do the operation via multiplication (by 4294967296/

divisor), optionally adding a 64-bit constant, and shifting the result down by 32 bits (logically the same as dividing by 4294967296). Among other code, this optimizes to just one umull, umlal, smull, or smlal Thumb instruction, taking only one clock cycle (according to Cortex-M4 technical reference manual). In typical code, the bit shift is "hidden" by consecutive code, by just using the high 32-bit word of the result, ignoring the low part.

The types of the multiplicands are important: If and only if both are unsigned, the result is unsigned. At least one has to be cast to 64-bit (

long long or

[u]int64_t); the other will be automatically promoted, but I personally prefer to cast both, just to be explicit. You do want to use a cast instead of specifying the parameters as 64-bit, so that the compiler knows that the arguments are just 32-bit, and only the result is 64-bit.

Apologies for the excess verbosity.