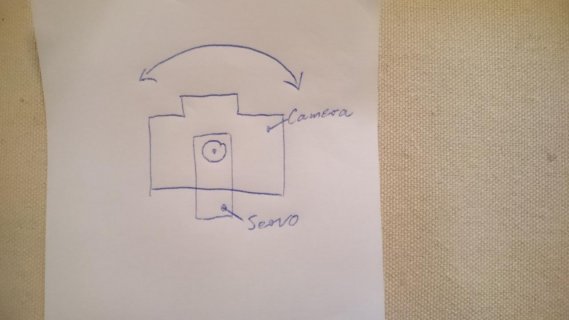

OK, then as soon as you give the servo a position input that differs from its current position, it will start moving towards that position. How exactly it does this depends on its internal controller, which is most likely a proportional controller tuned in some way or other. It will accelerate in the right direction until it is moving at a constant angular rate (the camera has been brought up to speed and its inertia carries it along), and then start to decelerate just before it reaches the position corresponding to your input. Once it gets there, it may overshoot and swing a bit, especially with a high inertia attached to it.
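To make that behaviour concrete, here is a toy simulation of a servo modelled as a proportional controller with damping, a torque limit and a speed limit, driving an inertial load. The model and every parameter in it are assumptions for illustration only; real servo internals vary and are usually undocumented. `ServoModel`, `servo_step` and `peak_angle` are hypothetical names.

```c
#include <assert.h>

/* Hypothetical servo model: all fields are illustrative assumptions,
 * not values from any real servo. */
typedef struct {
    double kp;         /* proportional gain: torque per degree of error */
    double kd;         /* damping: torque per degree/second (e.g. back-EMF) */
    double max_torque; /* motor torque limit */
    double max_rate;   /* top speed in degrees per second */
    double inertia;    /* load inertia (camera + mount) */
} ServoModel;

/* Advance angle (deg) and rate (deg/s) by dt seconds toward command. */
static void servo_step(const ServoModel *m, double command, double dt,
                       double *angle, double *rate)
{
    /* P controller with damping, clipped to the torque limit. */
    double torque = m->kp * (command - *angle) - m->kd * *rate;
    if (torque > m->max_torque) torque = m->max_torque;
    if (torque < -m->max_torque) torque = -m->max_torque;

    /* Torque accelerates the inertia; speed is clipped to the limit. */
    *rate += torque / m->inertia * dt;
    if (*rate > m->max_rate) *rate = m->max_rate;
    if (*rate < -m->max_rate) *rate = -m->max_rate;

    *angle += *rate * dt;
}

/* Step the command from 0 to `command` degrees and return the largest
 * angle reached within `seconds`; anything above `command` is overshoot. */
static double peak_angle(const ServoModel *m, double command, double seconds)
{
    assert(m->inertia > 0.0);
    const double dt = 0.001; /* 1 ms simulation step */
    double angle = 0.0, rate = 0.0, peak = 0.0;
    for (int i = 0; i < (int)(seconds / dt); ++i) {
        servo_step(m, command, dt, &angle, &rate);
        if (angle > peak) peak = angle;
    }
    return peak;
}
```

With the made-up values kp = 1, kd = 0.2, max_torque = 10 and max_rate = 300, an inertia of 0.001 reaches the 300°/s rate limit, cruises, and settles onto a 90° step without passing it, while an inertia of 0.1 overshoots well past 90° before swinging back.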

So whatever acceleration algorithm you implement, you may see some "rattling": if the position command advances too slowly, the camera reaches each intermediate position and decelerates before your algorithm gives the servo the next one. If your algorithm accelerates too fast, the servo never sees a ramp in your position command and simply moves towards the last position given, regardless of any speed you are trying to impose in software. Your algorithm has to operate between these two extremes to have any smoothing effect.
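One way to find the upper extreme is to work out how far the servo can physically travel in one update frame. Hobby servo datasheets commonly quote speed as seconds per 60°; a per-frame command step at or above that distance means the servo just runs at its own top speed and your ramp does nothing. This is a small sketch of that arithmetic; `max_step_per_frame` is a hypothetical helper and 0.12 s/60° is an illustrative figure, not a specific servo.

```c
#include <assert.h>

/* Largest distance (degrees) the servo can cover in one frame, from a
 * datasheet-style speed rating given in seconds per 60 degrees. */
double max_step_per_frame(double sec_per_60deg, double frame_s)
{
    assert(sec_per_60deg > 0.0 && frame_s > 0.0);
    double deg_per_s = 60.0 / sec_per_60deg; /* e.g. 0.12 s/60° -> 500 °/s */
    return deg_per_s * frame_s;              /* degrees per frame */
}
```

For example, `max_step_per_frame(0.12, 0.020)` gives about 10° per 20 ms frame. The datasheet figure is usually a no-load speed, so with a camera attached the real ceiling is lower; a useful step size sits somewhere below it and has to be found by experiment.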

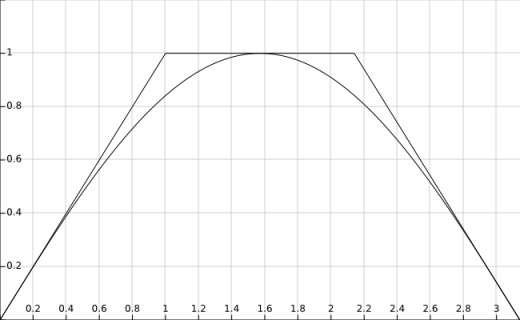

With an algorithm that is executed every 20 ms (the usual update rate of servo signals), the simplest thing you can do is keep position and speed as state variables. In every iteration, adjust the speed to get a bit closer to the desired speed; the change in speed per iteration is the acceleration. Then add the speed to the current position and output that to the servo. This gives you two parameters to tune for your servo/camera combination: acceleration and speed. If you get both right, the result can be very smooth movement.

You can also simplify this and work with a fixed speed. In that case, if your position variable is not yet what you want it to be, add (or subtract) a fixed step each iteration until it reaches the desired value:

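```c
#include <math.h>

void servo_out(float x); /* provided elsewhere: sends x to the servo */

/* Call once every 20 ms (hypothetical name). x is the position last sent
 * to the servo; velocity is the fixed step per call. */
void update(float *x, float target, float velocity)
{
    if (*x < target) {
        *x = fminf(target, *x + velocity); /* step up without passing target */
    } else if (*x > target) {
        *x = fmaxf(target, *x - velocity); /* step down without passing target */
    }
    servo_out(*x);
}
```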

To rephrase: your servo is a rabbit and you hold the carrot. You need to figure out how to move the carrot forward so that it stays within a given distance interval ahead of the rabbit. Unfortunately, the servo's hard speed limit makes this a fairly non-linear system that is hard to control.