I published a new class library on GitHub for reading the AMS TSL1402R linear photodiode array and sending the sensor's pixel data over USB serial to a Processing visualization sketch.

https://github.com/Mr-Mayhem/Read-TSL1402R-Optical-Sensor-using-Teensy-3.x

It includes example Arduino and Processing sketches.

This is my first GitHub project, so bear with me if anything is a little wacky.

Please add it to the Teensy libraries index once it passes muster.

It's fairly well optimized for speed because it combines the sensor's parallel readout mode with the Teensy ADC library's simultaneous read of two pixels at a time, rather than reading the two pixels sequentially as many other examples do. And that is, of course, much faster than the sensor's serial readout mode, which reads one pixel at a time.
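To make the parallel readout concrete, here is a hardware-free C++ sketch of how one frame could be assembled. It assumes the TSL1402R's parallel-mode behavior as I understand it from the datasheet: the 256 pixels are split into two 128-pixel sections, and each clock step presents one pixel from each section on the AO1 and AO2 outputs at the same time. `readFrameParallel` and the `readPair` callback are hypothetical names, not the library's API; on a Teensy, `readPair` would wrap the CLK/SI pulsing (omitted here) and the ADC library's synchronized two-pin read (`analogSynchronizedRead`).

```cpp
#include <array>
#include <cstdint>
#include <functional>
#include <utility>

// TSL1402R: 256 pixels split into two 128-pixel sections.
constexpr int kPixels = 256;
constexpr int kHalf = kPixels / 2;

using Frame = std::array<uint16_t, kPixels>;

// Hypothetical hook: returns {AO1 sample, AO2 sample} for clock step i.
// On hardware this would clock the sensor and do one synchronized ADC read.
using ReadPairFn = std::function<std::pair<uint16_t, uint16_t>(int)>;

// Fill a whole frame with 128 synchronized reads instead of 256 sequential ones.
Frame readFrameParallel(const ReadPairFn& readPair) {
  Frame frame{};
  for (int i = 0; i < kHalf; ++i) {
    auto [ao1, ao2] = readPair(i);  // both sections sampled at the same instant
    frame[i] = ao1;                 // section 1: pixels 1..128
    frame[i + kHalf] = ao2;         // section 2: pixels 129..256
  }
  return frame;
}
```

The point of the layout is that one loop fills both halves of the frame, so the number of conversion steps is halved compared with reading AO1 and AO2 one after the other.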

Another speed improvement is that I send the data as binary rather than sending each sensor value as a string of characters. Each sensor reading goes out as a byte pair, and a unique prefix/delimiter byte is used for parsing and sync.

To keep the data from ever being mistaken for the sync byte, I use a bit-shift strategy that takes advantage of having only 12 bits of data in a 16-bit byte pair. Shifting before sending ensures that no data byte ever equals the sync byte value of 255; I shift back after receiving.
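The post doesn't spell out the exact shift, so the following C++ sketch shows one scheme with the stated property, under labeled assumptions: a single 0xFF sync byte starts each frame, and each 12-bit sample is shifted left by one bit and sent high byte first. `encodeFrame` and `decodeFrame` are hypothetical names, not functions from the library.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

constexpr uint8_t kSync = 0xFF;  // sync byte value from the post
constexpr int kPixels = 256;

// Assumed scheme: shift each 12-bit sample left by 1 before sending.
// Max 4095 << 1 = 0x1FFE, so the high byte is <= 0x1F and the low byte is
// always even (<= 0xFE): neither byte can ever equal 0xFF.
void appendSample(std::vector<uint8_t>& out, uint16_t sample12) {
  uint16_t shifted = static_cast<uint16_t>((sample12 & 0x0FFF) << 1);  // keep 12 bits, then shift
  out.push_back(static_cast<uint8_t>(shifted >> 8));    // high byte
  out.push_back(static_cast<uint8_t>(shifted & 0xFF));  // low byte
}

std::vector<uint8_t> encodeFrame(const std::array<uint16_t, kPixels>& frame) {
  std::vector<uint8_t> out;
  out.reserve(1 + 2 * kPixels);
  out.push_back(kSync);  // assumed: one sync byte marks the start of each frame
  for (uint16_t s : frame) appendSample(out, s);
  return out;
}

// Scan for the sync byte, then decode the following 2 * kPixels bytes.
// Returns nullopt if no sync byte is found or the frame is incomplete.
std::optional<std::array<uint16_t, kPixels>> decodeFrame(
    const std::vector<uint8_t>& stream) {
  for (std::size_t i = 0; i < stream.size(); ++i) {
    if (stream[i] != kSync) continue;  // data bytes are never 0xFF
    if (stream.size() - i - 1 < 2 * kPixels) return std::nullopt;
    std::array<uint16_t, kPixels> frame{};
    for (int p = 0; p < kPixels; ++p) {
      uint16_t hi = stream[i + 1 + 2 * p];
      uint16_t lo = stream[i + 2 + 2 * p];
      frame[p] = static_cast<uint16_t>(((hi << 8) | lo) >> 1);  // undo the shift
    }
    return frame;
  }
  return std::nullopt;
}
```

Because 0xFF can only ever be a sync byte under this scheme, a receiver that starts reading mid-frame simply skips bytes until the next 0xFF; no byte-stuffing or escaping is needed.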

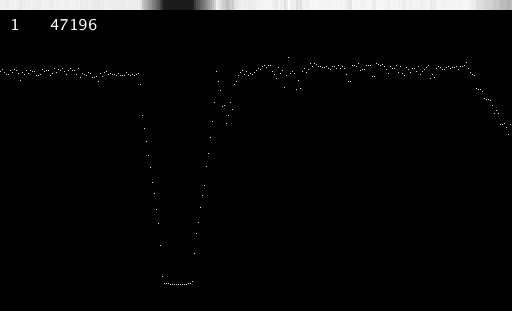

It runs pretty fast: about 250 to 300 frames per second, where a frame is one complete read of all 256 pixels.

(Speed matters when the data is part of a machine's feedback loop, as in my case, where I use this to measure things live on a moving CNC machine.)
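As a rough sanity check on the data rate, assume one sync byte per frame plus two bytes per pixel (an assumption about the framing, not a figure from the repository), and, for comparison, ASCII values of up to four digits plus one separator character each:

$$
\begin{aligned}
\text{binary:}\quad 1 + 2 \times 256 &= 513\ \text{bytes/frame},\\
513 \times 300\ \text{frames/s} &= 153{,}900\ \text{bytes/s} \approx 1.23\ \text{Mbit/s},\\
\text{ASCII:}\quad 5 \times 256 &= 1280\ \text{bytes/frame},\\
1280 \times 300\ \text{frames/s} &= 384{,}000\ \text{bytes/s}.
\end{aligned}
$$

So under these assumptions the binary format moves roughly 2.5 times fewer bytes per frame, and at 300 frames/s the receiver has only about 3.3 ms per frame to read, parse, and draw.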

In fact, even though the Processing sketch is stripped down fairly well for speed, it still can't keep up with the data stream. My serial AvailableBytes display (shown in the top right of the Processing window) kept increasing and latency kept growing, so I had to insert a delay in the Arduino loop to slow down the Teensy 3.6 frame rate.

That's a good problem to have. I will try to write a C++ visualizer soon and see how fast that can go.

I developed it on a Teensy 3.6, but it should work on any Teensy 3.x with appropriate pin changes.

The two ADC pins must be chosen from particular pin groups so that each of the two analog inputs uses a separate internal ADC. It won't work if both inputs share the same internal hardware ADC.

I used a Teensy 3.6 pinout card image from the forums that was edited to show which pins use which ADC:

https://forum.pjrc.com/threads/34808-K66-Beta-Test?p=114102&viewfull=1#post114102
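The pin-group rule can be written as a small check. This is a hypothetical C++ helper, not part of the library or the ADC library: the pin sets must be filled in from the pinout card, and any numbers you test it with here are placeholders, not real Teensy 3.6 pin assignments. It tries both assignments because a pin may be readable by either ADC.

```cpp
#include <cstdint>
#include <set>

// Hypothetical pin groups: fill these in from the pinout card.
struct AdcPinGroups {
  std::set<uint8_t> adc0;  // pins ADC0 can read
  std::set<uint8_t> adc1;  // pins ADC1 can read
};

// A simultaneous read needs one pin on each internal ADC.
// Some pins may be reachable from both ADCs, so check both assignments.
bool canReadSimultaneously(const AdcPinGroups& g, uint8_t pinA, uint8_t pinB) {
  if (pinA == pinB) return false;  // two sensor outputs need two distinct pins
  bool aOn0bOn1 = g.adc0.count(pinA) && g.adc1.count(pinB);
  bool aOn1bOn0 = g.adc1.count(pinA) && g.adc0.count(pinB);
  return aOn0bOn1 || aOn1bOn0;
}
```

For example, with placeholder groups adc0 = {1, 2, 3} and adc1 = {3, 4, 5}, pins 1 and 4 pass, while pins 1 and 2 fail because both sit only on ADC0.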

I am working on an extended version that sends the data to an ESP8266 via SPI, which then relays it over WiFi to a PC. After that, I foresee bringing an ESP32 into the mix.

Much of the code for extra features in the Processing sketch is commented out to make it run faster. I will add a full-featured Processing sketch shortly, with all the bells and whistles turned on.

I will be happy to answer questions and tweak things as needed.
