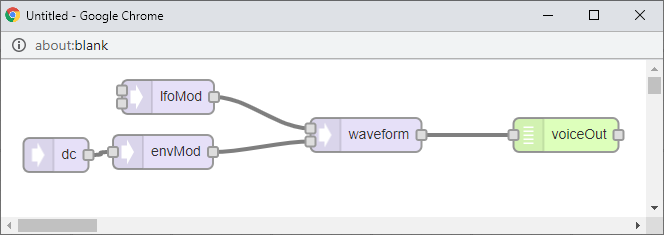

I am trying to create a reusable Synth class that would build the connections between my different audio objects. But when I try to instantiate the AudioConnection objects within this class, it doesn't work :-/ (it seems I have reached the limits of my C++ knowledge).


I actually believe I could even create a new audio object, as described here https://www.pjrc.com/teensy/td_libs_AudioNewObjects.html, but I have even less of an idea how to do that in a way that connects all those sub audio objects.

I am a bit lost and I would love to get some help to find my way.

Code:

```cpp
#include <Audio.h>

AudioOutputMQS audioOut;

class Synth {
protected:

public:
  // Patch cords connecting the audio objects below
  AudioConnection patchCord01;
  AudioConnection patchCord02;
  AudioConnection patchCord03;
  AudioConnection patchCord05;
  AudioConnection patchCord06;

  // Audio objects
  AudioSynthWaveformDc dc;
  AudioEffectEnvelope envMod;
  AudioSynthWaveformModulated lfoMod;
  AudioSynthWaveformModulated waveform;
  AudioEffectEnvelope env;

  // Synth parameters
  byte currentWaveform = 0;
  float attackMs = 0;
  float decayMs = 50;
  float sustainLevel = 0;
  float releaseMs = 0;
  float frequency = 440;
  float amplitude = 1.0;

  Synth(AudioStream* audioDest)
    : patchCord01(lfoMod, waveform),        // lfoMod -> waveform (input 0)
      patchCord02(dc, envMod),              // dc -> envMod
      patchCord03(envMod, 0, waveform, 1),  // envMod -> waveform input 1
      patchCord05(waveform, env),           // waveform -> env
      patchCord06(env, *audioDest) {        // env -> output passed in
    waveform.frequency(frequency);
    waveform.amplitude(amplitude);
    // arbitraryWaveform: wavetable array defined elsewhere in the sketch (not shown)
    waveform.arbitraryWaveform(arbitraryWaveform, 172.0);
    waveform.begin(WAVEFORM_SINE);

    lfoMod.frequency(1.0);
    // lfoMod.amplitude(0.5);
    lfoMod.amplitude(0.0);
    lfoMod.begin(WAVEFORM_SINE);

    env.attack(attackMs);
    env.decay(decayMs);
    env.sustain(sustainLevel);
    env.release(releaseMs);
    env.hold(0);
    env.delay(0);

    dc.amplitude(0.5);

    envMod.delay(0);
    envMod.attack(200);
    envMod.hold(200);
    envMod.decay(200);
    envMod.sustain(0.4);
    envMod.release(1500);
  }

  void noteOn() {
    envMod.noteOn();
    lfoMod.phaseModulation(0);
    env.noteOn();
  }

  void noteOff() {
    env.noteOff();
    envMod.noteOff();
  }

  // ...and some more functions to control everything
};

// Declared after the class definition so that Synth is a complete type here
Synth synth{&audioOut};

// Uncommenting the following code makes the whole thing work,
// but I want the connections to happen within the Synth class:
//AudioConnection patchCord01(synth.lfoMod, synth.waveform);
//AudioConnection patchCord02(synth.dc, synth.envMod);
//AudioConnection patchCord03(synth.envMod, 0, synth.waveform, 1);
//AudioConnection patchCord05(synth.waveform, synth.env);
//AudioConnection patchCord06(synth.env, audioOut);
```
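One thing that may be worth checking: in C++, non-static data members are constructed in the order they are declared in the class, not in the order they appear in the constructor's initializer list. In the Synth class above, patchCord01 to patchCord06 are declared before dc, envMod, lfoMod, waveform and env, so every AudioConnection is built before the objects it connects. If the audio objects' constructors reset their connection state (an assumption here, not something confirmed in this post), links made that early would be lost, which would match the symptom. The standalone sketch below uses hypothetical MockAudioObject and MockConnection types (not part of the Teensy Audio library) that only record when they are constructed, to compare the two declaration orders.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Records the order in which the mock members below are constructed.
std::vector<std::string> constructionLog;

// Hypothetical stand-in for a Teensy audio object such as
// AudioSynthWaveformModulated: it only logs its own construction.
struct MockAudioObject {
    explicit MockAudioObject(const char* name) { constructionLog.push_back(name); }
};

// Hypothetical stand-in for AudioConnection: it receives references to its
// source and destination but, unlike the real class, never touches them.
struct MockConnection {
    MockConnection(MockAudioObject& /*source*/, MockAudioObject& /*dest*/) {
        constructionLog.push_back("patchCord");
    }
};

// Mirrors the posted Synth: the patch cord is declared BEFORE the objects it
// joins. Declaration order, not initializer-list order, decides when each
// member is built, so the cord is constructed first.
struct CordFirst {
    MockConnection patchCord;
    MockAudioObject lfoMod{"lfoMod"};
    MockAudioObject waveform{"waveform"};
    CordFirst() : patchCord(lfoMod, waveform) {}
};

// Same members with the audio objects declared first: both are fully
// constructed before the patch cord is created.
struct ObjectsFirst {
    MockAudioObject lfoMod{"lfoMod"};
    MockAudioObject waveform{"waveform"};
    MockConnection patchCord;
    ObjectsFirst() : patchCord(lfoMod, waveform) {}
};

// Builds one T and returns the order in which its members were constructed.
template <typename T>
std::vector<std::string> constructionOrder() {
    constructionLog.clear();
    T instance;
    (void)instance;  // constructed only for its side effects on the log
    return constructionLog;
}
```

Here constructionOrder<CordFirst>() yields {"patchCord", "lfoMod", "waveform"} while constructionOrder<ObjectsFirst>() yields {"lfoMod", "waveform", "patchCord"}. If member order is indeed the cause, moving the AudioConnection members below the audio objects in Synth, while keeping the same initializer list, should behave like the global patch cords.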