Good Morning all,

Lots of posts since I last responded. Luckily, Mike has already mentioned most of the things I would have brought up.

But I will mention a few, plus a few more background / "I don't understand" type comments.

Paul (and others): Some basic understandings of USB stuff, like:

Bulk Transfers:

a) T4 will try to use High Speed (512-byte packets); T3.x will use Full Speed (64-byte packets). Note: if a T4 connects at Full Speed it will also use 64-byte packets. Do we need a setup that forces the T4 to Full Speed to see if there are any differences? Note: I did add some code to cores and the MTP code to not always assume 512 bytes, but I know I probably missed some places.

b) How big is the bulk transfer? On T4.x on Windows, Wireshark made it look like it was receiving 127 packets followed by 1, then 127, 1, ... for SendObject, whereas on Ubuntu it looked like 32... I have not looked at whether that is different on T3.6 with 64-byte packets. I also don't understand whether that is the host saying "I want these sizes". Does the Teensy have any say? Is this a real thing, or only how Wireshark interprets/reports the data?
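To make the packet counts above easier to compare between boards, here is a rough sketch of the arithmetic. The helper names (`bulkPacketSize`, `packetsFor`) are made up for this post and are not from cores or the MTP code; the only hard facts are the 512-byte High Speed and 64-byte Full Speed packet sizes mentioned above.

```cpp
#include <cstddef>

// Bulk packet sizes discussed above: 512 bytes at High Speed (T4.x),
// 64 bytes at Full Speed (T3.x, or a T4.x that connected at Full Speed).
constexpr std::size_t kHighSpeedBulkPacket = 512;
constexpr std::size_t kFullSpeedBulkPacket = 64;

// Hypothetical helper: choose the packet size from the negotiated speed
// instead of assuming 512 everywhere.
constexpr std::size_t bulkPacketSize(bool highSpeed) {
    return highSpeed ? kHighSpeedBulkPacket : kFullSpeedBulkPacket;
}

// Number of packets needed to move `bytes`; the last packet may be short.
constexpr std::size_t packetsFor(std::size_t bytes, bool highSpeed) {
    return (bytes + bulkPacketSize(highSpeed) - 1) / bulkPacketSize(highSpeed);
}
```

With these numbers, a 16KB host transfer is 32 packets at High Speed but 256 packets at Full Speed, so the "32" seen on Ubuntu would look quite different on a T3.6 if the host keeps the same transfer size. That last part is a guess on my side, not something I have captured.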

MTP Timeouts: (some of these could go under Bulk Transfers as well)

a) SendObject: What criteria does MTP use to fail/time out? I don't think it is the total time of the operation, since Mike mentioned he was able to receive the large file on T3.x, which took forever. Instead, I think it is not seeing enough progress in the data being received, though I can't yet say exactly what that means. I have experimented a few different ways:

1) Make T4 act like T3, which is: receive a packet, write the data out, receive the next... There is a version in the code (//#define T4_USE_SIMPLE_SEND_OBJECT) which still times out and runs really slowly.

2) Do reads in the background while a write operation is in progress (in the branch throwing_darts). Start up an interval timer that runs maybe every 15ms, and if there is room in the second output buffer it reads in the next... so as to try to keep it busy. But this was still timing out. I was experimenting with a larger FIFO buffer, where maybe we try to read up to 10K of data ahead, but that brings up other issues. ...

But again, how does it decide to fail? For example, take the Ubuntu code I use for playing with 512-byte RawHID, where I am trying to make a version that uses bulk transfers. The host code might call usb_bulk_write(hid->usb, hid->ep_out, buf, len, timeout);

So, for example, if I am going to send a 3MB file, is it likely that the host is calling something like this, in the case of Ubuntu with a 16KB buffer (32*512), and that to avoid MTP failing we need to progress fast enough to read in each 16KB chunk? Is Windows worse because it uses bigger transfers? Again, is there any way to negotiate this?
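To spell out the model I have in my head (and it is only a guess at what the host does), here is a sketch where the host sends the file in fixed-size chunks and gives up on the first chunk that does not complete. `BulkWrite` is a stand-in I made up for a call like usb_bulk_write(); `SendResult` and `sendInChunks` are likewise hypothetical names, not anything from a real MTP host.

```cpp
#include <cstddef>
#include <functional>

// Stand-in for a host call such as usb_bulk_write(): returns the number of
// bytes written, or a negative value if the timeout expired (assumption).
using BulkWrite =
    std::function<int(const unsigned char* buf, int len, int timeoutMs)>;

struct SendResult {
    std::size_t chunksSent;  // chunks fully written before stopping
    bool ok;                 // false if any chunk failed or timed out
};

// Send `total` bytes in chunks of `chunkBytes`. Each chunk gets its own
// timeout, so the device only has to keep up one chunk at a time.
SendResult sendInChunks(const unsigned char* data, std::size_t total,
                        std::size_t chunkBytes, int timeoutMs,
                        const BulkWrite& write) {
    SendResult result{0, true};
    std::size_t offset = 0;
    while (offset < total) {
        const std::size_t remaining = total - offset;
        const std::size_t n = remaining < chunkBytes ? remaining : chunkBytes;
        const int wrote = write(data + offset, static_cast<int>(n), timeoutMs);
        if (wrote != static_cast<int>(n)) {
            result.ok = false;  // one slow chunk fails the whole transfer
            return result;
        }
        offset += n;
        ++result.chunksSent;
    }
    return result;
}
```

If this model is right, a 3MB file with 16KB chunks is 192 separate writes, and the total time would not matter as long as every single chunk finishes inside its own timeout. That would match Mike's slow but successful T3.x transfer.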

b) Responding to commands, timeouts: If we don't respond to a message fast enough, MTP will abort on the PC and require something like a reboot of the Teensy.

1) SendObject: again like before, but if, for example, we used a large cache to read everything in and then continued to write the data out, MTP will fail if we don't send the response packet within what it thinks is a reasonable time.

2) Startup: There is code in place now that starts an interval timer to respond to a few packets, and then respond with "we are busy". That is why sometimes the MTP disk will show up on the PC but be empty unless you do a refresh... Otherwise it just simply failed.

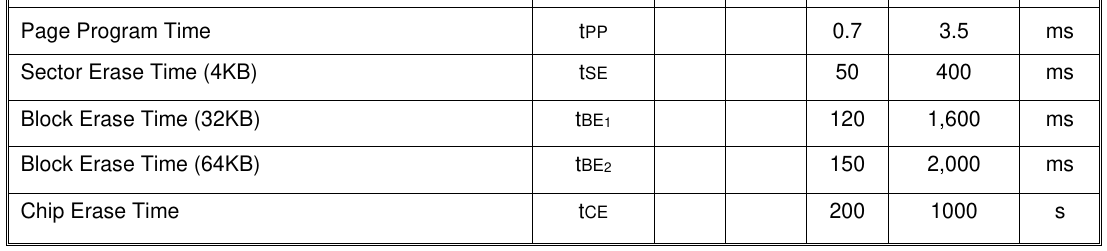

3) CopyObject/MoveObject: If asked to copy a file from one of our storages to another and that takes too long, MTP will quit... I have not done anything on this one other than verify it is an issue. For example, if we copy a 3MB bitmap to a LittleFS and had to erase each 4K sector, with each erase taking an average of 50ms, then this operation might easily take over 30 seconds. For sure MTP would time out.
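Just to show where the "over 30 seconds" comes from, here is the back-of-the-envelope version. The function names are made up for this post, and it only counts erase time, assuming every 4K sector needs an erase and ignoring the actual write time.

```cpp
#include <cstddef>

// Number of sectors touched when writing `fileBytes` (rounded up).
constexpr std::size_t sectorsToErase(std::size_t fileBytes,
                                     std::size_t sectorBytes) {
    return (fileBytes + sectorBytes - 1) / sectorBytes;
}

// Erase time only, in seconds, assuming every sector needs an erase that
// takes `eraseMs` on average.
constexpr double eraseSeconds(std::size_t fileBytes, std::size_t sectorBytes,
                              unsigned eraseMs) {
    return sectorsToErase(fileBytes, sectorBytes) * (eraseMs / 1000.0);
}
```

For a 3MB file (taken as 3 * 1024 * 1024 bytes), 4K sectors and 50ms per erase, that is 768 erases and roughly 38 seconds before counting any of the writes.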

So again we probably need an interval timer that, after a certain time, responds back to the host, probably saying OK. After that it needs to respond to query messages with "we are busy", and when the copy is done it would need to send an event saying the object has changed...
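A very rough sketch of that flow as a tiny state holder, to make the sequence concrete. The class and method names are hypothetical and nothing like this exists in the MTP code today. The two response codes are the standard MTP ones for OK (0x2001) and Device_Busy (0x2019); which event to send at the end is left to the caller.

```cpp
#include <cstdint>

constexpr std::uint16_t kRespOk = 0x2001;          // MTP response: OK
constexpr std::uint16_t kRespDeviceBusy = 0x2019;  // MTP response: Device_Busy

// Hypothetical tracker for one long-running CopyObject/MoveObject.
class DeferredCopy {
public:
    // Host asked for the copy: start it in the background and answer OK
    // right away so the host does not time out waiting for the response.
    std::uint16_t begin() {
        busy_ = true;
        eventPending_ = false;
        return kRespOk;
    }

    // Any query that arrives while the copy is still running gets "busy".
    std::uint16_t onQuery() const { return busy_ ? kRespDeviceBusy : kRespOk; }

    // Called by the background work when the copy has completed.
    void finish() {
        busy_ = false;
        eventPending_ = true;
    }

    // Polled from the main loop: returns true exactly once after finish(),
    // which is when the caller should send the "object changed" event.
    bool takeEvent() {
        const bool pending = eventPending_;
        eventPending_ = false;
        return pending;
    }

private:
    bool busy_ = false;
    bool eventPending_ = false;
};
```

Whether the host is happy to get OK before the copy has really finished is exactly the part I have not tested.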

...

Background/House Keeping - @defragster formatUnused...

That was the reason, in the post about possible FS changes, for suggesting some method like (idle, loop) that a sketch could call when it has nothing else to do. Each time it is called, LittleFS might erase one sector if it knows it has dirty ones...
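Something along these lines is what I was picturing. This is only a simulation with made-up names (`LazyEraser`, `markDirty`, `idle`); it is not the LittleFS API, and the real erase call is just a comment here.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical model of "erase one dirty sector per idle call".
class LazyEraser {
public:
    explicit LazyEraser(std::size_t sectorCount) : dirty_(sectorCount, false) {}

    // The file system would mark a sector dirty when its data is freed.
    void markDirty(std::size_t sector) { dirty_.at(sector) = true; }

    // Called by the sketch when it has nothing else to do. Does at most one
    // erase per call so it never blocks for long. Returns true if it erased.
    bool idle() {
        for (std::size_t i = 0; i < dirty_.size(); ++i) {
            if (dirty_[i]) {
                // A real implementation would erase flash sector i here.
                dirty_[i] = false;
                ++erased_;
                return true;
            }
        }
        return false;  // nothing dirty, nothing to do
    }

    std::size_t erasedCount() const { return erased_; }

private:
    std::vector<bool> dirty_;
    std::size_t erased_ = 0;
};
```

The point of doing one sector per call is that each call costs about one erase time at most, so the sketch stays responsive and later writes are more likely to find sectors that are already erased.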

Probably enough for this post.

Sorry for the book!

EDIT: The bulk transfers on Windows for SendObject alternated between 261659 bytes (511 512-byte packets plus overhead, I think) and 539...
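Checking the arithmetic on those two sizes, with a throwaway helper (the name is made up): both come out to the same 27 bytes beyond whole 512-byte packets. Whether those 27 bytes are something the capture adds or something actually on the wire is an assumption I have not verified.

```cpp
#include <cstddef>

// Bytes left over after subtracting `packets` full packets of `packetBytes`.
// Assumes captured >= packets * packetBytes.
constexpr std::size_t overheadBytes(std::size_t captured, std::size_t packets,
                                    std::size_t packetBytes) {
    return captured - packets * packetBytes;
}

static_assert(overheadBytes(261659, 511, 512) == 27, "large transfer");
static_assert(overheadBytes(539, 1, 512) == 27, "small transfer");

// 511 + 1 packets of 512 bytes is exactly 256KB of payload per pair.
static_assert((511 + 1) * 512 == 256 * 1024, "payload per pair");
```

So each large/small pair would carry 256KB of payload, if the 27 bytes really are per-transfer overhead.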