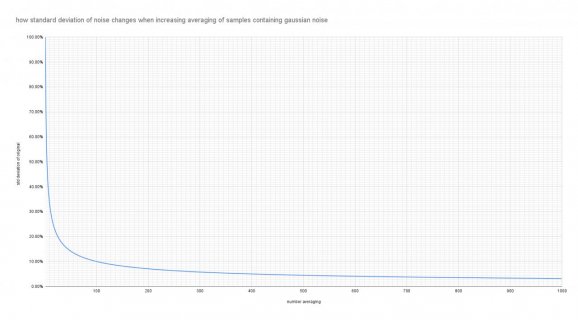

I was curious to see how accurate the 12-bit ADC is on the Teensy 4.x. The PJRC product page suggests there is some limitation ("The hardware allows up to 12 bits of resolution, but in practice only up to 10 bits are normally usable due to noise"), but I wanted to see whether more refinement was possible by repeated sampling and averaging, and what the noise distribution looked like.

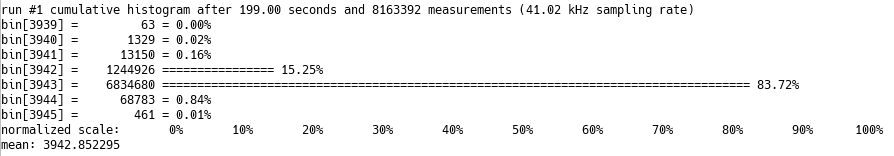

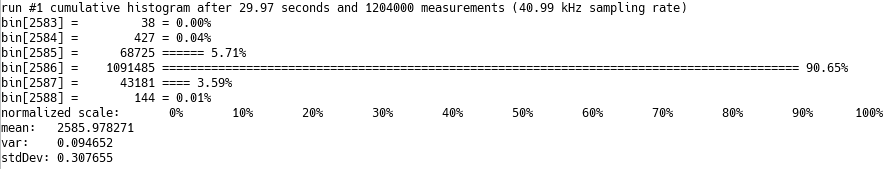

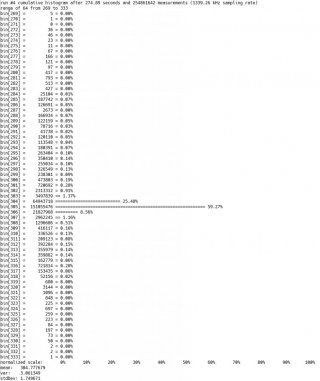

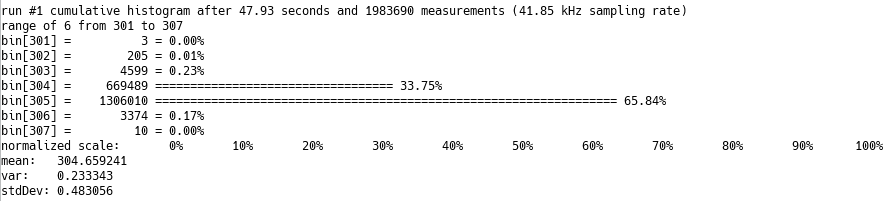

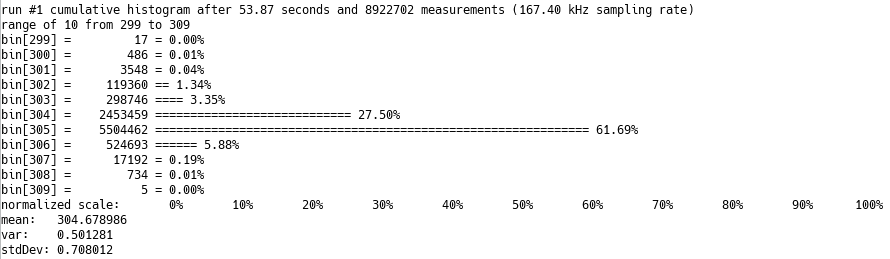

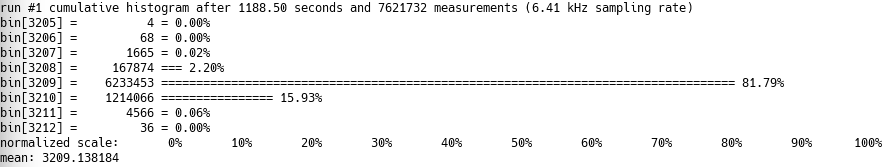

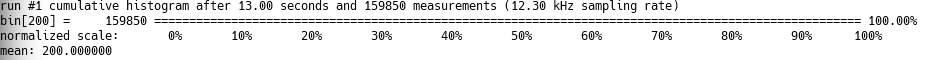

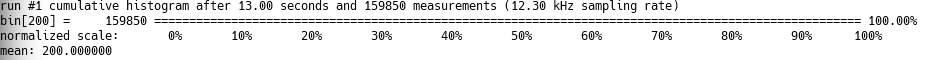

So I wrote a simple program to print out a histogram of the measurements. Here is an example measuring a 5 kΩ pot at some position between 3.3 V and ground, using analogReadBitDepth=12 and analogReadAveragingNum=32:

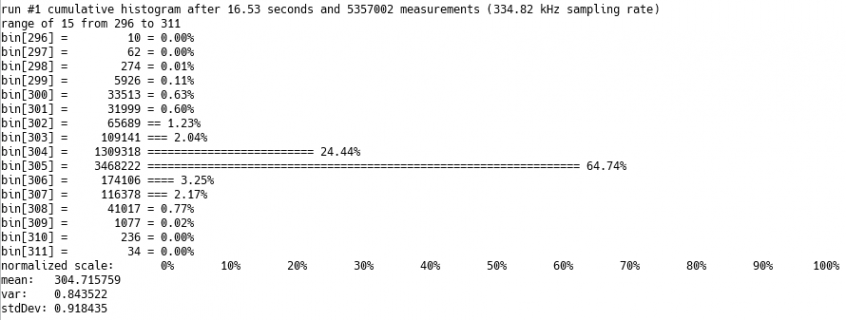
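For anyone who wants the gist without opening the repo, here is a minimal sketch of the histogram bookkeeping. It is not the code from the link, just an illustration: the name `AdcHistogram` and its members are made up. On a Teensy the codes would come from `analogRead()`, but here they are passed in so the logic can be compiled and checked on a desktop.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Histogram of raw ADC codes for a given bit depth (12 bits -> 4096 bins).
// Hypothetical helper for illustration; samples are supplied by the caller.
template <unsigned Bits>
struct AdcHistogram {
    static_assert(Bits <= 16, "codes are stored as 16-bit values");
    static constexpr std::size_t kBins = std::size_t{1} << Bits;

    std::array<std::uint32_t, kBins> counts{};  // one counter per ADC code
    std::uint64_t total = 0;                    // number of samples recorded
    std::uint64_t sum = 0;                      // sum of all codes, for the mean

    void add(std::uint16_t code) {
        if (code >= kBins) return;  // ignore codes outside the bit depth
        ++counts[code];
        ++total;
        sum += code;
    }

    double mean() const {
        return total ? static_cast<double>(sum) / static_cast<double>(total) : 0.0;
    }

    // Most frequently seen code (the lowest code wins ties).
    std::uint16_t mode() const {
        std::size_t best = 0;
        for (std::size_t i = 1; i < kBins; ++i)
            if (counts[i] > counts[best]) best = i;
        return static_cast<std::uint16_t>(best);
    }

    void reset() {
        counts.fill(0);
        total = 0;
        sum = 0;
    }
};
```

Keeping a running `sum` alongside the bins means the mean comes for free without walking all 4096 counters on every print.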

And here is an animation of the first 15 seconds:

The accuracy of the mean seems to improve over time. (I might want to investigate further to see how quickly it converges to a specified degree of accuracy.) At first glance the noise looks like it could be roughly Gaussian.
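On the convergence question: if the noise really is roughly Gaussian and the samples are independent (both are assumptions, and mains hum or other correlated noise would make this optimistic), the uncertainty of the mean should shrink like sigma / sqrt(N). Below is a rough sketch that tracks the running mean and standard deviation with Welford's method and estimates the standard error. `RunningStats` and `samplesForStandardError` are hypothetical names, not anything from my sketch or the Teensy core.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Running mean and variance using Welford's online algorithm.
struct RunningStats {
    std::uint64_t n = 0;
    double mean = 0.0;
    double m2 = 0.0;  // sum of squared deviations from the current mean

    void add(double x) {
        ++n;
        const double delta = x - mean;
        mean += delta / static_cast<double>(n);
        m2 += delta * (x - mean);
    }

    // Sample variance (divides by n - 1); zero until two samples exist.
    double variance() const {
        return n > 1 ? m2 / static_cast<double>(n - 1) : 0.0;
    }
    double stddev() const { return std::sqrt(variance()); }

    // Standard error of the mean, assuming independent samples.
    double standardError() const {
        return n > 0 ? stddev() / std::sqrt(static_cast<double>(n)) : 0.0;
    }
};

// Number of independent samples needed so that the standard error of the
// mean is at most `target`, given a per-sample standard deviation `sigma`.
inline std::uint64_t samplesForStandardError(double sigma, double target) {
    assert(sigma >= 0.0 && target > 0.0);
    const double ratio = sigma / target;
    return static_cast<std::uint64_t>(std::ceil(ratio * ratio));
}
```

As an illustration with made-up numbers (not measurements): a per-sample sigma of 2 codes would need 16 samples to get the standard error down to 0.5 code and 64 samples to reach 0.25 code, so each halving of the error costs four times the samples.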

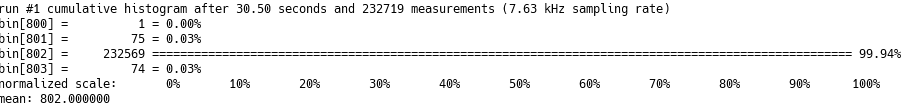

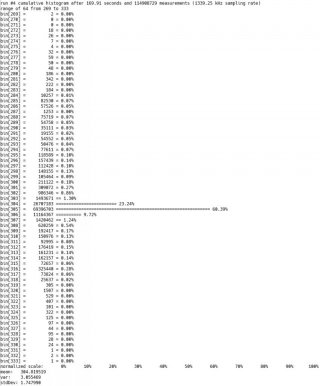

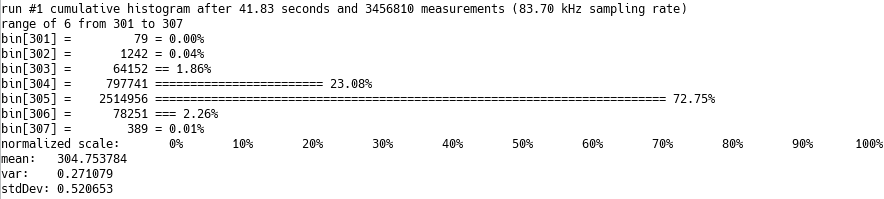

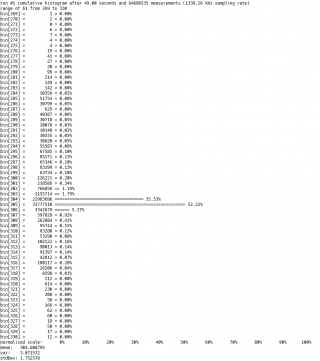

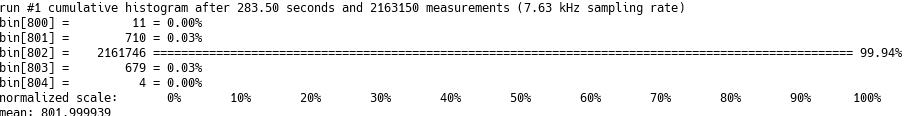

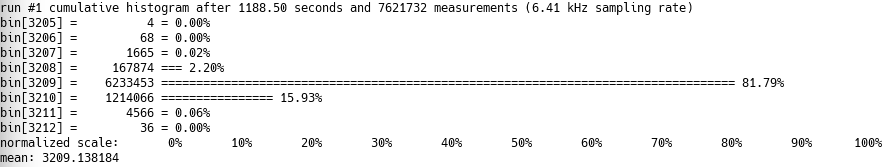

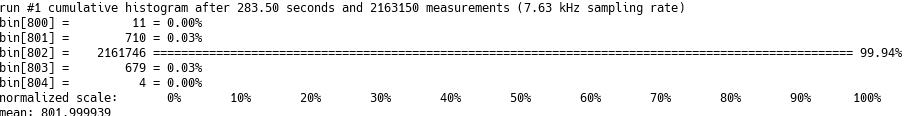

It turns out the analogRead function has a limited sampling rate: at 12 bits I was only able to sample at 6.41 kHz (regardless of the value I used for analogReadAveragingNum). I don't know whether using the advanced ADC library or hand-coding something would improve it, or whether this is a hardware limit (I did peek at the Teensy core GitHub repo and saw that the function waits for some condition before making another reading). With analogReadBitDepth=10 I got a 7.63 kHz sampling rate:

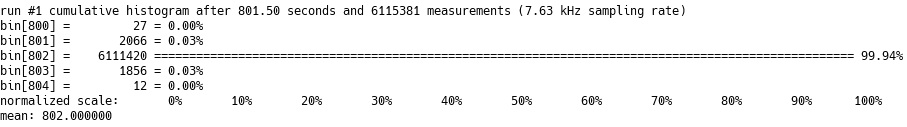

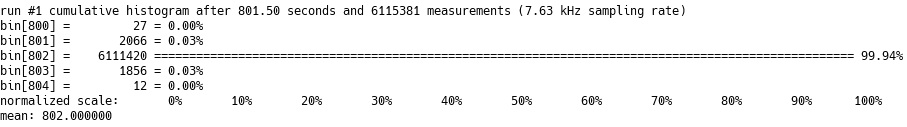
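To put the sampling rates quoted above in perspective, 6.41 kHz works out to about 156 µs per reading and 7.63 kHz to about 131 µs. Here is a small sketch of the two conversions involved; the function names are made up, and on a Teensy the elapsed time would presumably be the difference of two `micros()` readings.

```cpp
#include <cassert>
#include <cstdint>

// Time per sample in microseconds for a given sampling rate in Hz.
inline double samplePeriodMicros(double rateHz) {
    assert(rateHz > 0.0);
    return 1.0e6 / rateHz;
}

// Sampling rate in Hz from a sample count and the elapsed time in
// microseconds. Returns 0 if no time has elapsed yet.
inline double sampleRateHz(std::uint64_t samples, std::uint64_t elapsedMicros) {
    if (elapsedMicros == 0) return 0.0;
    return static_cast<double>(samples) * 1.0e6 /
           static_cast<double>(elapsedMicros);
}
```

Counting samples over a fixed window of a second or so and dividing, rather than timing a single call, averages out the jitter of any one reading.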

and after even more time:

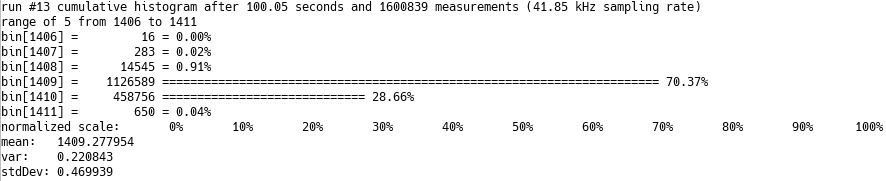

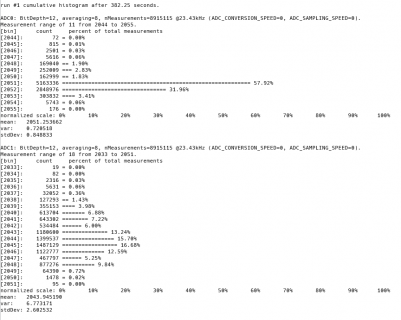

As expected given the note that "in practice only up to 10 bits are normally usable due to noise", there is much less variation at 10 bits, though there are still some deviations, probably also due to noise. With only 8 bits, the results are almost always exactly on one value or another, with only the occasional variation.
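One way to see why the spread narrows at lower bit depths: the voltage step per ADC code grows as the resolution drops, so the same noise voltage covers fewer codes. A quick sketch of that arithmetic, assuming a 3.3 V reference (an assumption based on the pot being wired between 3.3 V and ground); the function names are hypothetical.

```cpp
#include <cassert>

// Voltage step of one ADC code for a given resolution and reference voltage.
inline double lsbVolts(unsigned bits, double vref) {
    assert(bits < 32);
    return vref / static_cast<double>(1u << bits);
}

// How many ADC codes a fixed peak-to-peak noise voltage spans.
inline double noiseSpanInCodes(double noiseVoltsPeakToPeak, unsigned bits,
                               double vref) {
    return noiseVoltsPeakToPeak / lsbVolts(bits, vref);
}
```

With a 3.3 V reference, one code is about 0.81 mV at 12 bits, 3.2 mV at 10 bits, and 12.9 mV at 8 bits. So an illustrative 4 mV of peak-to-peak noise (not a measured figure) would smear across about 5 codes at 12 bits, a little over 1 code at 10 bits, and well under 1 code at 8 bits.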

Here's my code: https://github.com/ericfont/teensy_...a74b2aec5b416b4fc90/histogram_measure_ADC.ino.

Note that pressing a push button wired between pin 0 and ground triggers an interrupt that clears the histogram and starts a new run of collecting samples. So if you want to change your pot position and gather new samples, wire up a push button between pin 0 and ground, and press it after changing the pot (or whatever analog value you want to read).
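Here is a rough sketch of one way to wire up that kind of reset. It is a variation, not necessarily what the linked code does: the interrupt handler only raises a flag, and the main loop does the actual clearing. The names `onResetButton`, `recordSample`, and `resetRequested` are made up, and `A0` is an assumed input pin. The Arduino-specific part is guarded so the logic also compiles on a desktop.

```cpp
#include <cstddef>
#include <cstdint>

constexpr std::size_t kBins = 4096;    // 12-bit readings
std::uint32_t histogram[kBins] = {};   // one counter per ADC code
volatile bool resetRequested = false;  // set by the ISR, cleared by the loop

// Interrupt handler: keep it short and only raise a flag.
void onResetButton() { resetRequested = true; }

// Called from the main loop for every new reading.
void recordSample(std::uint16_t code) {
    if (resetRequested) {
        // Clear outside the ISR so the handler stays short.
        for (std::size_t i = 0; i < kBins; ++i) histogram[i] = 0;
        resetRequested = false;
    }
    if (code < kBins) ++histogram[code];
}

#ifdef ARDUINO
void setup() {
    pinMode(0, INPUT_PULLUP);  // button between pin 0 and ground
    attachInterrupt(digitalPinToInterrupt(0), onResetButton, FALLING);
    analogReadResolution(12);
    analogReadAveraging(32);
}

void loop() {
    // A0 is an assumed input pin; use whichever pin the pot wiper is on.
    recordSample(static_cast<std::uint16_t>(analogRead(A0)));
}
#endif
```

The internal pull-up keeps pin 0 high until the button shorts it to ground, which is why the interrupt is attached on the falling edge. Button bounce can fire the interrupt several times, but that is harmless here because repeated requests just set the same flag.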

If anyone knows techniques for increasing the sampling rate, I'd be curious. According to https://forum.pjrc.com/threads/25532-ADC-library-with-support-for-Teensy-4-3-x-and-LC there is a "Continuous" mode and a "ContinuousDifferential" mode, which seem interesting...gonna look into them now.

