Hi there,

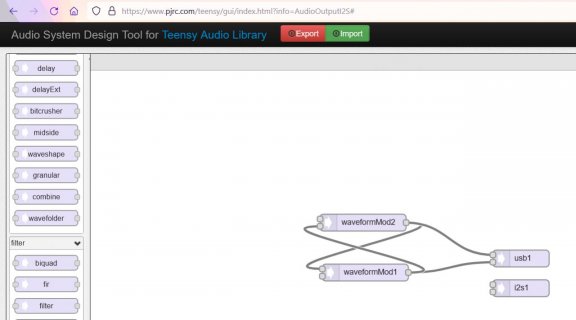

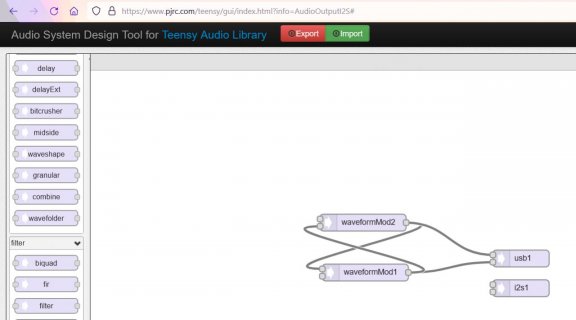

I'd like to understand, at a high level, how block processing works when the audio "graph" contains a loop, as in the case below (waveformMod1 gets FM from waveformMod2, and waveformMod2 gets PWM from waveformMod1). Given that the Audio library processes AUDIO_BLOCK_SAMPLES samples at a time, I see the following options:

1. Always use data that lags by AUDIO_BLOCK_SAMPLES (each waveform uses the "cached" result of the previous block's processing).
2. Arbitrarily (or by some set of rules) break the cycle: process, say, waveform1 first, then use its result for waveform2.
3. Something else?

If it's option 2, are there rules I can learn for how the cycle gets broken?

Code:

    #include <Audio.h>
    #include <Wire.h>
    #include <SPI.h>
    #include <SD.h>
    #include <SerialFlash.h>

    // GUItool: begin automatically generated code
    AudioSynthWaveformModulated waveformMod2; //xy=491.20001220703125,327
    AudioSynthWaveformModulated waveformMod1; //xy=495.20001220703125,416
    AudioOutputUSB usb1; //xy=772.2000122070312,391
    AudioOutputI2S i2s1; //xy=772.2000122070312,444
    AudioConnection patchCord1(waveformMod2, 0, waveformMod1, 0); // waveformMod2 -> frequency (FM) input of waveformMod1
    AudioConnection patchCord2(waveformMod2, 0, usb1, 0);
    AudioConnection patchCord3(waveformMod1, 0, usb1, 1);
    AudioConnection patchCord4(waveformMod1, 0, waveformMod2, 1); // waveformMod1 -> shape (PWM) input of waveformMod2
    // GUItool: end automatically generated code

    // the setup routine runs once when you press reset:
    void setup() {
      AudioMemory(256);
      const float masterVolume = 1.0f;

      waveformMod1.begin(WAVEFORM_SQUARE);
      waveformMod2.begin(WAVEFORM_PULSE);
      waveformMod1.offset(1);
      waveformMod1.amplitude(masterVolume);
      waveformMod2.amplitude(masterVolume);
    }

    // the loop routine runs over and over again forever:
    void loop() {
      // fixed stand-ins for two knob readings in the range 0..1
      float knob_1 = 0.2;
      float knob_2 = 0.8;

      float pitch1 = pow(knob_1, 2);
      // float pitch2 = pow(knob_2, 2);

      waveformMod1.frequency(10 + (pitch1 * 50));
      waveformMod2.frequency(10 + (knob_2 * 200));
      waveformMod1.frequencyModulation(knob_2 * 8 + 3); // FM depth in octaves
    }
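
To make options 1 and 2 concrete, here is a toy model in plain C++ of what I mean. It is not Audio library code and I'm not claiming the library works either way. `Node`, `updateAllLagged`, `updateInOrder` and `lagInSamples` are names I made up for illustration, and `kBlockSamples = 128` is only my assumption about the usual value of AUDIO_BLOCK_SAMPLES. Each node just records which update cycle produced the block it consumed, so the lag is easy to read off.

```cpp
#include <cassert>

// Assumed stand-in for AUDIO_BLOCK_SAMPLES (hypothetical constant for this sketch).
constexpr int kBlockSamples = 128;

// Hypothetical node in a two-node feedback loop. No audio is generated;
// we only track which update cycle each block belongs to.
struct Node {
    int lastOutputCycle = -1;    // cycle in which this node's current output block was made
    int consumedInputCycle = -1; // cycle tag of the block this node read on its last update
};

// Option 1: both nodes read a snapshot taken before anyone updates,
// so each one always sees the other's previous-cycle block.
void updateAllLagged(Node& a, Node& b, int cycle) {
    const int aPrev = a.lastOutputCycle;
    const int bPrev = b.lastOutputCycle;
    a.consumedInputCycle = bPrev;
    b.consumedInputCycle = aPrev;
    a.lastOutputCycle = cycle;
    b.lastOutputCycle = cycle;
}

// Option 2: nodes update in a fixed order. The first node can only see the
// second node's previous block; the second node sees the first node's fresh one.
void updateInOrder(Node& first, Node& second, int cycle) {
    first.consumedInputCycle = second.lastOutputCycle; // still from the previous cycle
    first.lastOutputCycle = cycle;
    second.consumedInputCycle = first.lastOutputCycle; // already from this cycle
    second.lastOutputCycle = cycle;
}

// How old, in samples, was the input block a node consumed during `cycle`?
int lagInSamples(const Node& n, int cycle) {
    assert(cycle >= n.consumedInputCycle);
    return (cycle - n.consumedInputCycle) * kBlockSamples;
}
```

In this model, after two update cycles option 1 gives a lag of one block on both modulation paths, while option 2 gives one block of lag on the path into whichever node updates first and no lag on the other. That asymmetry is what I'd like to understand: if the library does something like option 2, what decides which node goes first?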