This is an idea and code I've been working on for a while now, and it's starting to get close to completion, so I thought I'd highlight the kind of performance I'm seeing and the approach and tricks used.

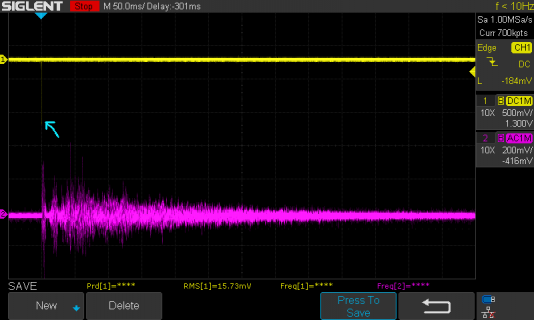

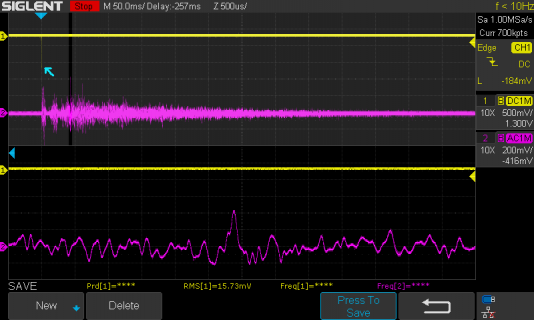

I've got a 66161-sample (1.5 s) impulse response in a 16-bit WAV file on an SD card, which is read in to configure my class. The class uses 404 kB of dynamically allocated memory (DMAMEM, I presume) in addition to 64 kB of fixed buffers. This fits on a standard T4.

The algorithm splices together two levels of uniformly-partitioned convolution: one that processes the 128-sample audio blocks individually (achieving the 1-block latency), and one that processes blocks of 2048 samples (16 times larger). For this particular file, tier1 handles 32 of the 128-sample blocks and tier2 handles 31 of the 2048-sample blocks. As tier2 only needs to update every 46.4 ms (2048 samples at 44.1 kHz), this substantially reduces the processor cycles needed.

The tier2 processing is amortized over 16 consecutive update() calls to keep the CPU workload even.

The result: I see about 28 to 35% ProcessorUsage on a T4.0 at 600 MHz, which is very usable. (With a single-tier partitioned convolution I could only get to about 20k taps before the CPU usage maxed out.)

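This 35% is at a 44100 Hz sample rate with 66161 taps, mono. Done directly in the time domain, that is 66161 × 44100 ≈ 2.9 billion multiply-accumulates per second, so even using the whole CPU it would allow only 0.34 ns per multiply-accumulate operation, about a fifth of a single 1.67 ns clock cycle at 600 MHz.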

One trick is to store the FFT data as q15_t or q7_t arrays, even though the convolution processing is all floating point, as this immediately reduces memory requirements by a factor of 2 or more. The latter part of the impulse response, handled by tier2, uses only q7 storage for its filter FFT data, with a per-block float scaling factor to minimize quantization errors. This does entail some extra processing to re-scale, however.

The sample FFT data is kept at 16 bits in q15_t arrays, as this represents the signal itself. I figure you can accept a lot more raggedness in representing a reverberation impulse than in the signal it's modulating, especially for the tail of the impulse response, which is typically much lower in amplitude. The first 46 ms of the impulse response come entirely from the first tier at 16-bit resolution; thereafter it merges into the second tier with its 8-bit resolution.

Another trick is to use the real FFT primitives in CMSIS, which use half the memory footprint for real input (*). This rules out the trick of coding two channels into the real and imaginary slots of a full complex FFT, but I'm aiming for the most taps I can get.

The factor of 16 between the tiers is configurable; 16 seems to perform best for the larger impulse responses. I think you want roughly equal block counts in the two tiers for best throughput.

(*) Actually this is only true for the arm_rfft_fast_f32 call; the others return a full spectrum.
