Nuclear_Man_D

New member

Hello,

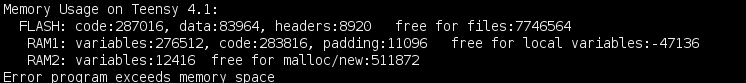

I am attempting to run a very large application on the Teensy 4.1. Most of the code was originally written for Linux, so it was never forced to be small enough to run on any microcontroller, even the largest ones. Normally, a program like this fails to fit because it uses many globals with high RAM usage, or because it is too large to fit in flash, but here neither is true. Instead, this program is using more than 280k in the RAM1 bank for code alone:

My question is, what part of my code is using this RAM? My hypothesis is vtables, since I have tons of classes, some of which have a lot of abstract methods. The thing is, I know from looking at the T4.1's core source code that it already has a lot of classes in it, and to my knowledge those classes don't occupy this much RAM. It seems like if this data could be moved to flash, I'd have plenty of space for it, since I'm using hardly any of the flash capacity (relatively speaking). Also, is this sort of RAM1 usage for code normal?
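To illustrate the style I mean, here is a minimal sketch. The class names are made up for this post and are not taken from the OS. On the usual C++ ABIs, each concrete class gets one table of function pointers and each object carries a hidden pointer to it; whether that table is what lands in the RAM1 code block is exactly what I am unsure about.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical interface in the style of my code: several pure virtual methods.
class Device {
public:
    virtual ~Device() = default;
    virtual int read(uint8_t* buf, size_t len) = 0;
    virtual int write(const uint8_t* buf, size_t len) = 0;
    virtual const char* name() const = 0;
};

// Hypothetical concrete class. The compiler emits one vtable for it
// (a constant table of function pointers) shared by all instances.
class NullDevice : public Device {
public:
    int read(uint8_t*, size_t) override { return 0; }
    int write(const uint8_t*, size_t len) override { return static_cast<int>(len); }
    const char* name() const override { return "null"; }
};

// A polymorphic object has to hold at least the hidden vtable pointer.
static_assert(sizeof(NullDevice) >= sizeof(void*),
              "polymorphic object should hold at least a vtable pointer");

// Calls through the base reference are dispatched via the vtable.
inline int writeThrough(Device& dev, const uint8_t* data, size_t len) {
    return dev.write(data, len);
}
```

If the hypothesis were right, I would expect the cost to scale with the number of classes and virtual methods, not with the number of objects.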

I should probably note my OS, optimization settings, and other details of my configuration, if only for context:

- I am running Linux Mint (uname -a: "Linux nuclaer-machine 5.15.0-89-generic #99-Ubuntu SMP Mon Oct 30 20:42:41 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux")

- Arduino 1.8.19 with TeensyDuino 1.58. Compiling through Arduino, no CMake or Makefile.

- Currently only testing with an SD card connected. I will connect other hardware later, but none of the wiring is related to the RAM usage.

- Optimization setting is "Faster". "Fastest" makes the memory consumption far worse, "Fast" does not improve RAM usage, and "Smallest Code" prevents the Teensy 4.1 from booting, as I discuss later in this post. "Debug" does not compile.

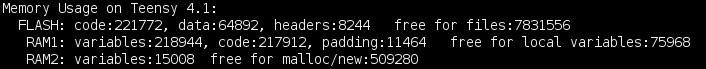

The sketch is broken into three parts: the small part that I edit in the Arduino editor, the OS I wrote for Arduino a few years ago, and the new application code I added. I don't want to share the application code for various reasons, but the OS part, which uses a very large share of the space, is on my GitLab server here: https://git.nuclaer-servers.com/Nuclaer/ntios-2020. If I run a nearly identical setup with the application code removed, the RAM code usage is still high, so I know that the OS portion is using a huge amount of this RAM1 code space:

Given that I do have a style of programming reflected in both the application and the OS code, I'd think it's likely that whatever is eating up this code block in the OS is the same mechanism for the application code. The compilation results also seem to indicate that the OS is using more of this block.

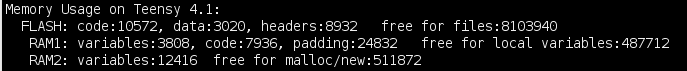

For comparison, this is the utilization of the ASCIITable example sketch:

To confirm, I have moved to PROGMEM the few large global arrays I had, namely three icons and two pixel fonts. I saw a drop in RAM1 usage, but it wasn't enough. Nonetheless, I don't think that would be considered code memory in RAM1, so I don't think it really has much to do with the question in this post.
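For reference, the change looked roughly like the sketch below. The icon data is a made-up placeholder, not one of my real icons, and the empty fallback `#define` is only there so the fragment also compiles with a desktop compiler, where `PROGMEM` does not exist.

```cpp
#include <cstddef>
#include <cstdint>

// On Teensy, PROGMEM comes from the core headers. This empty fallback is an
// assumption for desktop builds only and has no effect on the board.
#ifndef PROGMEM
#define PROGMEM
#endif

// Hypothetical 8x8 icon, one byte per row. const + PROGMEM asks the linker
// to leave the array in flash instead of copying it into RAM1 at startup.
static const uint8_t kIconArrow[8] PROGMEM = {
    0x18, 0x3C, 0x7E, 0xFF, 0x18, 0x18, 0x18, 0x18
};

// Counts the set pixels in one row of an icon.
inline int rowPixels(const uint8_t* icon, size_t row) {
    int count = 0;
    for (uint8_t bits = icon[row]; bits != 0; bits >>= 1) {
        count += bits & 1;
    }
    return count;
}
```

On the Teensy 4.1 I read these arrays through ordinary pointers, as in `rowPixels(kIconArrow, 0)`, without any special access functions.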

I want to mention again that I couldn't get the "Smallest Code" optimization option to run on the Teensy 4.1, even for the ASCIITable example sketch, so my code is not at fault there. After being flashed with such a build, the Teensy will not connect over USB and cannot be flashed automatically; the button to manually initiate flashing must be pressed. This is really beyond the scope of this post and deserves its own thread, but I wanted to mention it. The "Debug" optimization mode also did not compile, but I did not investigate it much.

Anyway, back to the original question: What in my code is using so much space in RAM1? Furthermore, how can I move it to flash, even if this reduces my execution speed?
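The one mechanism I have come across so far is the `FLASHMEM` attribute in the Teensy core, which, as I understand it, leaves a function in flash instead of copying it to RAM1. Below is a minimal sketch of what I would try, assuming it behaves that way. The function is a made-up placeholder, and the empty fallback `#define` only exists so the fragment compiles off the board.

```cpp
#include <cstdint>

// On Teensy 4.x, FLASHMEM comes from the core headers. This empty fallback is
// an assumption for desktop builds only.
#ifndef FLASHMEM
#define FLASHMEM
#endif

// Hypothetical rarely-called routine. Marking it FLASHMEM should keep its
// machine code in flash, trading execution speed for RAM1 space.
FLASHMEM uint32_t checksum(const uint8_t* data, uint32_t len) {
    uint32_t sum = 0;
    for (uint32_t i = 0; i < len; ++i) {
        sum = sum * 31u + data[i];
    }
    return sum;
}
```

Annotating every function by hand would be impractical for a code base this size, so I am hoping there is a way to apply this to whole files or to make it the default.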

More generally, what specific parts of our compiled code (vtables, for example) go to which RAM and flash blocks? This is vaguely described in many places, but I'm referring to the more specific and intense technical details.
