For my TCD1304DG experiments, I am testing how fast the ADC measurements are.

I started with the simplest ADC method: calling `analogRead` inside `void loop()`.

I was surprised that the processor clock rate has almost no influence on the sampling frequency.

I toggle an output pin for my oscilloscope before and after the read command. The time difference is only about 145 ns. This surprises me, because the loop repetition frequency seen on the scope is only about 55 kHz.

So the processor seems to spend its time on "more important" things instead of serving me. Even if I add a `Serial.println(val)` output to the loop, the loop frequency hardly changes.

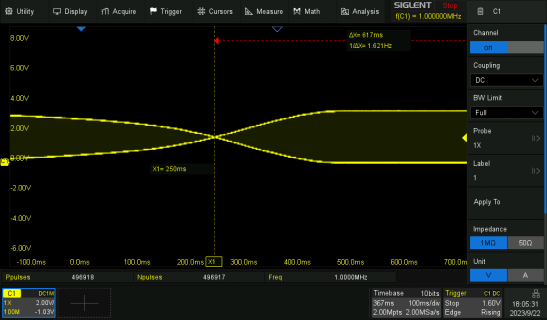

However, the serial output occasionally disturbs the timing: the time difference sometimes increases from 145 ns to 222 ns.

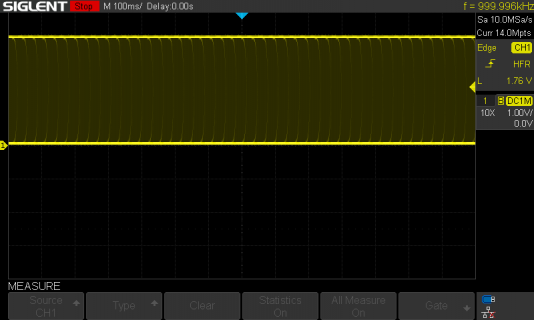

I don't like this jitter much. Later I want to connect a screen directly to display my measured values (a spectrogram); let's see how much time that will take. By the way, without `analogRead` the loop runs at 7.4 MHz, and the time difference between the two signals is then still 135 ns.
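Putting the two loop frequencies side by side gives a rough estimate of how long `analogRead` itself takes. This is a minimal sketch that assumes both quoted frequencies (55 kHz and 7.4 MHz) are the square-wave frequencies on the toggled pin, that the pin toggles once per loop pass, and that nothing else in the loop changes. `pass_period_ns` and `analog_read_cost_ns` are hypothetical helper names:

```cpp
#include <cassert>

// Period of one loop() pass in nanoseconds, given the square-wave frequency
// seen on the scope. Assumption: the pin toggles once per pass, so the pass
// rate is twice the signal frequency.
double pass_period_ns(double signal_hz) {
    assert(signal_hz > 0.0);
    return 1e9 / (2.0 * signal_hz);
}

// Rough cost of analogRead(): the extra time per pass compared with the same
// loop without the call. Assumption: everything else in the loop is unchanged.
double analog_read_cost_ns(double signal_hz_with, double signal_hz_without) {
    return pass_period_ns(signal_hz_with) - pass_period_ns(signal_hz_without);
}
```

With the numbers from the post, `analog_read_cost_ns(55e3, 7.4e6)` comes out at roughly 9023 ns, i.e. about 9 µs per pass, which is far longer than the 145 ns edge delay.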

Do you think that another ADC method would be better?

How do I know when the ADC has finished its measurement? Does `analogRead` wait for the conversion, or does it just return the last measurement?
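One way to find out whether a call waits for the conversion is to time many calls back to back: a call that only returns a stored result should cost nanoseconds, while a call that waits should cost roughly the conversion time. The sketch below illustrates the pattern on a desktop with `std::chrono`; on the board, the same idea would wrap a loop of `analogRead` calls with Arduino's `micros()`. `average_call_ns` is a hypothetical helper, not part of any library:

```cpp
#include <cassert>
#include <chrono>

// Average wall-clock time of one call to `f`, measured over `n` calls.
// Timing a whole batch amortizes the cost of the two clock reads; the result
// still includes a little loop overhead per call.
template <typename F>
double average_call_ns(F f, int n) {
    assert(n > 0);
    auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < n; ++i) {
        f();
    }
    auto stop = std::chrono::steady_clock::now();
    std::chrono::duration<double, std::nano> total = stop - start;
    return total.count() / n;
}
```

For example, `average_call_ns([] { /* call under test */ }, 10000)` returns the mean time per call in nanoseconds.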

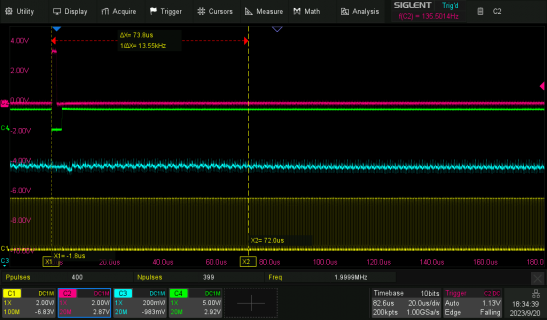

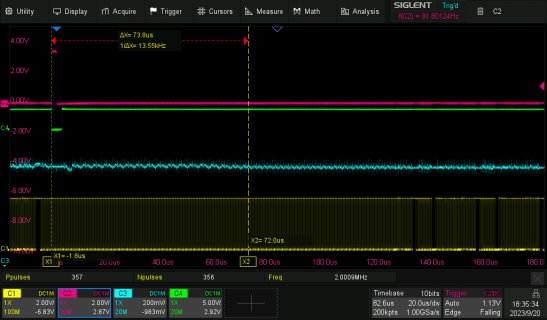

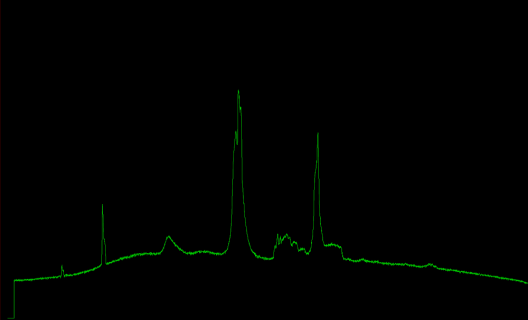

The oscillogram shows the two output signals that are toggled before and after the ADC measurement (red and yellow). Their time difference of 145 ns would then be the time the ADC needs, and the frequency of the signals reflects the time the whole loop needs.

Since I toggle on every loop pass, the loop itself runs twice as fast as the signal frequency. The screenshots show some statistics and histograms.
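Because SH and fMPin each toggle exactly once per pass, the two intervals between adjacent edges (SH to fMPin, and fMPin to the next SH) always add up to one pass period. A quick cross-check, assuming the ~55 kHz signal frequency and the 145 ns delay quoted above; `complementary_interval_ns` is a hypothetical helper name:

```cpp
#include <cassert>

// SH and fMPin each toggle once per loop pass, so the two intervals between
// adjacent edges sum to one pass period. Assumption: `signal_hz` is the
// square-wave frequency on one pin, so one pass lasts 1 / (2 * signal_hz).
// Given one measured interval, return the other one.
double complementary_interval_ns(double signal_hz, double measured_ns) {
    assert(signal_hz > 0.0);
    double period_ns = 1e9 / (2.0 * signal_hz);
    assert(measured_ns >= 0.0 && measured_ns <= period_ns);
    return period_ns - measured_ns;
}
```

With these numbers one pass takes about 9.09 µs, so `complementary_interval_ns(55e3, 145.0)` gives about 8.95 µs for the other interval. Which of the two intervals contains the `analogRead` call depends on which pair of edges the scope's delay measurement picks.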

Code:

```cpp
constexpr int fMPin = 3;  // scope trace: toggled right after analogRead()
constexpr int SH = 5;     // scope trace: toggled right before analogRead()

void setup()
{
  //Serial.begin(38400);
  pinMode(fMPin, OUTPUT);
  pinMode(SH, OUTPUT);
  digitalWriteFast(fMPin, HIGH);
  digitalWriteFast(SH, LOW);
}

int val;  // last ADC result

void loop()
{
  digitalToggleFast(SH);     // edge just before the read
  val = analogRead(0);       // read analog input 0
  //Serial.print("analog 0 is: ");
  //Serial.println(val);
  digitalToggleFast(fMPin);  // edge just after analogRead() returns
}
```