CyborgEars

Active member

Hello all,

We would like to present an extensive audio hardware project that we are currently working on, along with some of the new ground we've explored regarding teensy audio capabilities.

Our goal

We aim to augment 'normal' human hearing with digitally processed realtime audio recordings.

Concept: we have an audio DSP, audio CODEC and headphone amplifier chip that plays the audio captured by a pair of small microphones to regular off-the-shelf in-ear headphones.

Unlike other designs, the microphones are not statically attached to the headphones; more on this in a later post.

Who we are

Chris & Johannes, two Master of Science students from Germany.

Our implementation

We use the teensy 3.1 microcontroller and the teensy audio shield, which carries the SGTL5000 audio chip with its limited built-in DSP.

Recording is done by two tiny 24-bit ICS-43432 MEMS microphones on breakout boards (Overview, datasheet) that output sound to a single stereo I2S audio line.

Top view on wearable experimental configuration of:

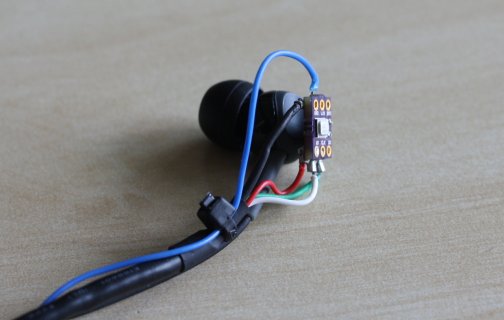

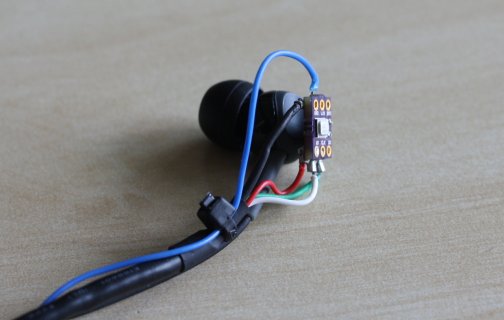

This picture shows a close up for size comparison:

Motivations leading to this project

Hearing aids have been socially accepted 'augmented hearing' devices for a long time, but:

Simulated hearing experience

Although we were not completely aware of this fact at the beginning of our project, our area of research is closely linked to the topic of Binaural recordings, where stereo sound recording is done at the ears of real or synthetic heads, with the goal to capture the authentic way the sound would reach a spectator's ears.

In contrast to this, the ability to record is not as important for us. While our platform could later be modified to record the sounds captured by our microphones to an SD card or computer, our primary goal is focused on the realtime experience. (This is partly due to our planned signal path. Also, there are many commercial products already aimed at binaural recording)

That said, binaural recordings are a good way to demonstrate the effects of an altered hearing experience and get into the topic of how immersive sound can be.

If you would like to try this, put some headphones on and listen to this binaural example from wikimedia.

Imagine yourself actually crumbling this paper in real life, but hearing it with swapped "ear"-sides (left ear recordings go to the right, and vice versa) - swapping sides is one of the easiest and at the same time most impressive ways to alter our hearing. It's also one of many effects that do not actually improve your hearing, but invites you to explore things we take for granted in our daily life.

Available DSP & mixer capablilites

As the SGTL5000 DSP was (probably) designed for media devices like smartphones, it's DSP features are very limited, and only include

As far as we know, the chip is also unable to do mixing of the microphone audio with sound from the line-in input channel, although there might be interesting application where it is enough to switch between them (listening to music on the train in one minute, talking to the train conductor in the next)

Implementation story, part 1

Achieving the working system we have now was not so straightforward:

As you may know, the I2S block of the ARM Cortex M4 used in the teensy 3.0/3.1 has a separate I2S stereo receive and a I2S stereo transmit channel, so our initial plan was based on receiving the microphone data on the teensy with the regular audio library created by Paul, doing some sound processing/mixing, and then transmitting the results to the audio shield via the I2S TX. There, the DSP could optionally apply some changes and then finally deliver the audio to the in-ear headphones.

This way, we'ld limit ourselves to a one way street of mics -> teensy processing -> teensy shield processing -> headphones, but could mix in e.g. synthesized sounds or any other teensy DSP function.

Unfortunately, the microphones provide fixed 24-bit audio data, while the internal ARM Cortex M4 DSP instructions are limited to 16 bit on a hardware level. For this reason, Paul had previously decided to hard-code his audio processing library for 16-bit sound data only (!), and we were unable to use or adapt it due to the low-level DSP instructions that are used throughout the library, as we decided against downconverting the audio to 16 bit for reasons of complexity, speed and audio quality.

Our current solution is to directly connect the microphones to the teensy audio shields I2S RX channel, which reduces the teensy to I2C control in theory.

('Passively' receiving the same microphone data on the teensy I2S RX is still an option, but not used. The actual I2S configuration is basically a hack and more complicated as explained here, but more on that later.)

To achieve direct microphone->audio shield input, we've successfully switched the SGTL5000 CODEC configuration to accept stereo 24 bit of audio data (in 32-bit I2S dataframes) at 48kHz sampling rate. (Choosing 48kHz over the regular 44.1kHz was a voluntary step and not necessary for the signal path)

[Update]

To clarify: our current signal path looks like this:

2x I2S mics -> teensy shield w/ SGTL5000 -> stereo headphones

##

So much for now. Please note: we're quite busy developing and might not be able to reply in time.

We would like to present you an extensive audio hardware project that we are currently working on, and some of the new ground we've explored relating to teensy audio capabilities.

Our goal

We aim to augment 'normal' human hearing with digitally processed realtime audio recordings.

Concept: we have an audio DSP, audio CODEC and headphone amplifier chip that

plays the audio captured by a pair of small microphones to regular off the shelf in-ear headphones.

Unlike other designs, the microphones are not statically attached to the headphones, more on this in a later post.

Who we are

Chris & Johannes, two Master of Science students from Germany.

Our implementation

We use the teensy 3.1 microcontroller and the teensy audio shield which contains the SGTL5000 audio chip and a limited DSP.

Recording is done by two tiny 24-bit ICS-43432 MEMS microphone on breakout boards (Overview, datasheet) that output sound to a single stereo I2S audio line.

Top view on wearable experimental configuration of:

- teensy

- audio shield

- special ICS-43432 microphone pcbs

- custom black-blue audio data cable

- headphones

- usb-battery

This picture shows a close up for size comparison:

Motivations leading to this project

Hearing aids have been socially accepted 'augmented hearing' devices for a long time, but:

- What benefits could "digitally modified" hearing bring to people without hearing disabilities?

- Can our senses be improved or altered in a way that is useful or pleasurable to an individual by such a system?

- To what extent can this be used to protect the regular hearing system, e.g. at a concert?

- How does it FEEL to actually hear things from a point outside of your body?

- What emotions will be brought up by altering the way the world sounds to us?

Simulated hearing experience

Although we were not completely aware of this at the beginning of our project, our area of research is closely linked to binaural recording, where stereo sound is recorded at the ears of real or synthetic heads, with the goal of capturing the sound the way it would authentically reach a listener's ears.

In contrast to this, the ability to record is not as important for us. While our platform could later be modified to record the sounds captured by our microphones to an SD card or computer, our primary focus is the realtime experience. (This is partly due to our planned signal path. Also, there are many commercial products already aimed at binaural recording.)

That said, binaural recordings are a good way to demonstrate the effects of an altered hearing experience and get into the topic of how immersive sound can be.

If you would like to try this, put some headphones on and listen to this binaural example from Wikimedia.

Imagine yourself actually crumpling this paper in real life, but hearing it with swapped "ear" sides (left ear recordings go to the right, and vice versa). Swapping sides is one of the easiest and at the same time most impressive ways to alter our hearing. It's also one of many effects that do not actually improve your hearing, but invite you to explore things we take for granted in our daily life.
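To make the side swap concrete, here is a minimal sketch in C++ of what it amounts to on a processor that has access to the sample stream. It assumes an interleaved stereo buffer (L0, R0, L1, R1, ...) holding one 32-bit word per sample; `swapStereoChannels` is a hypothetical helper name for illustration and not part of any library, and this is not necessarily how the swap is done in our hardware.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Swap the left and right sample of every stereo frame in an
// interleaved buffer laid out as L0, R0, L1, R1, ...
// Hypothetical helper for illustration only.
inline void swapStereoChannels(std::vector<int32_t>& interleaved) {
    // The buffer must contain whole stereo frames.
    assert(interleaved.size() % 2 == 0);
    for (std::size_t i = 0; i + 1 < interleaved.size(); i += 2) {
        std::swap(interleaved[i], interleaved[i + 1]);
    }
}
```

Since the operation only exchanges two values per frame, it adds no latency of its own, which matters for a realtime experience.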

Available DSP & mixer capabilities

As the SGTL5000 DSP was (probably) designed for media devices like smartphones, its DSP features are very limited, and only include

- amplification (multiple stages)

- limiting sound loudness

- sound compressor

- proprietary bass enhance w/ cutoff filter

- equalizer

As far as we know, the chip is also unable to mix the microphone audio with sound from the line-in input channel, although there might be interesting applications where it is enough to switch between them (listening to music on the train in one minute, talking to the train conductor in the next).

Implementation story, part 1

Achieving the working system we have now was not so straightforward:

As you may know, the I2S block of the ARM Cortex M4 used in the teensy 3.0/3.1 has a separate I2S stereo receive and an I2S stereo transmit channel. Our initial plan was therefore to receive the microphone data on the teensy with the regular audio library created by Paul, do some sound processing/mixing, and then transmit the results to the audio shield via the I2S TX. There, the DSP could optionally apply some changes and then finally deliver the audio to the in-ear headphones.

This way, we'd limit ourselves to a one-way street of mics -> teensy processing -> teensy shield processing -> headphones, but could mix in e.g. synthesized sounds or any other teensy DSP function.

Unfortunately, the microphones provide fixed 24-bit audio data, while the SIMD-style DSP instructions of the ARM Cortex M4 operate on packed 16-bit values at the hardware level. For this reason, Paul had previously decided to hard-code his audio processing library for 16-bit sound data only (!). As we decided against downconverting the audio to 16 bit for reasons of complexity, speed and audio quality, we could not use the library as it is, and the low-level DSP instructions used throughout it kept us from adapting it to 24 bit.
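For readers wondering what the rejected downconversion would involve, here is a minimal C++ sketch. It assumes each 32-bit I2S slot carries the signed 24-bit sample in its upper 24 bits with 8 padding bits below (please check this against the microphone datasheet); both function names are hypothetical and exist only for this illustration.

```cpp
#include <cstdint>

// Extract a signed 24-bit sample from a raw 32-bit I2S slot word.
// Assumption: the sample sits in bits 31..8, bits 7..0 are padding.
constexpr int32_t sampleFromI2sSlot(uint32_t slot) {
    const uint32_t raw = slot >> 8;  // 24-bit value now in bits 23..0
    // Sign-extend manually: if bit 23 is set, the value is negative.
    return (raw & 0x800000u) ? static_cast<int32_t>(raw) - 0x1000000
                             : static_cast<int32_t>(raw);
}

// Reduce a 24-bit sample to 16 bits by discarding the 8 least
// significant bits (floor division by 256, no dithering).
constexpr int16_t truncateTo16(int32_t sample24) {
    return static_cast<int16_t>(sample24 >= 0
                                    ? sample24 / 256
                                    : -((-sample24 + 255) / 256));
}

// Full scale survives, but everything below 1/256 of a 16-bit step is lost.
static_assert(truncateTo16(sampleFromI2sSlot(0x7FFFFF00u)) == 32767, "max");
static_assert(truncateTo16(sampleFromI2sSlot(0x0000FF00u)) == 0, "low bits lost");
```

The second `static_assert` shows the quality cost: a quiet 24-bit signal with an amplitude below 256 collapses to silence after truncation, and the conversion would have to run for every sample of both channels.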

Our current solution is to connect the microphones directly to the teensy audio shield's I2S RX channel, which in theory reduces the teensy's role to I2C control.

('Passively' receiving the same microphone data on the teensy I2S RX is still an option, but not used. The actual I2S configuration is basically a hack and more complicated than explained here, but more on that later.)

To achieve direct microphone -> audio shield input, we've successfully switched the SGTL5000 CODEC configuration to accept 24-bit stereo audio data (in 32-bit I2S data frames) at a 48 kHz sampling rate. (Choosing 48 kHz over the regular 44.1 kHz was a voluntary step and not necessary for the signal path.)
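As a rough sanity check of these numbers, the following C++ sketch computes the I2S bit clock that such a format implies. It assumes standard I2S framing with one slot per channel per sample period; the function names are hypothetical, and the actual register configuration is not shown here.

```cpp
#include <cstdint>

// I2S bit clock in Hz: every sample period carries one slot per channel.
constexpr uint32_t i2sBitClockHz(uint32_t sampleRateHz,
                                 uint32_t channels,
                                 uint32_t bitsPerSlot) {
    return sampleRateHz * channels * bitsPerSlot;
}

// Number of unused bits in each slot when the sample is narrower than the slot.
constexpr uint32_t paddingBitsPerSlot(uint32_t bitsPerSlot,
                                      uint32_t bitsPerSample) {
    return bitsPerSlot - bitsPerSample;
}

// 48 kHz stereo with 32-bit slots needs a 3.072 MHz bit clock.
static_assert(i2sBitClockHz(48000, 2, 32) == 3072000, "48 kHz case");
// The regular 44.1 kHz rate would need 2.8224 MHz instead.
static_assert(i2sBitClockHz(44100, 2, 32) == 2822400, "44.1 kHz case");
// 24-bit samples leave 8 padding bits in every 32-bit slot.
static_assert(paddingBitsPerSlot(32, 24) == 8, "padding");
```

In other words, the bit clock runs at 64 times the sampling rate, and a quarter of each slot carries no audio data.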

[Update]

To clarify: our current signal path looks like this:

2x I2S mics -> teensy shield w/ SGTL5000 -> stereo headphones

So much for now. Please note: we're quite busy developing and might not be able to reply in time.
